arielreplicate/deoldify_image ❓🖼️🔢 → 🖼️

About

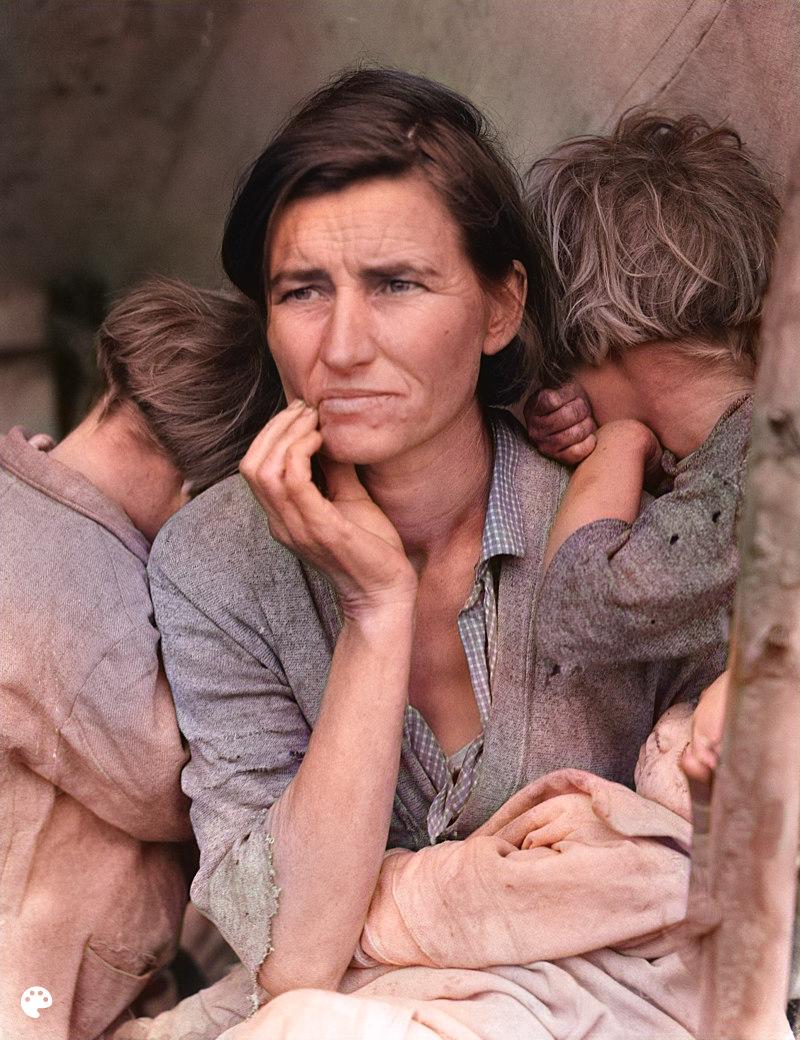

Add colours to old images

Example Output

Output

Performance Metrics

- Prediction Time: 27.55s

- Total Time: 27.59s

All Input Parameters

{
  "model_name": "Artistic",
  "input_image": "https://replicate.delivery/pbxt/I9uDZgopnhz6X956zgaBoorFWbUmu5HHDyjkd3BY3ZnxVAdu/1.jpg",
  "render_factor": 35
}
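The parameters above can be sent to the model with Replicate's Python client. The following is a minimal sketch, not an official recipe: it assumes the third-party `replicate` package is installed and the `REPLICATE_API_TOKEN` environment variable is set. The helper names `build_input` and `colorize` are hypothetical, and the version ID is the one listed under Version Details on this page.

```python
# Model identifier and version ID as shown on this page.
MODEL = "arielreplicate/deoldify_image"
VERSION = "0da600fab0c45a66211339f1c16b71345d22f26ef5fea3dca1bb90bb5711e950"


def build_input(input_image, model_name="Artistic", render_factor=35):
    """Build the input payload (hypothetical helper, not part of any library)."""
    # The page documents two model names: Artistic and Stable.
    if model_name not in ("Artistic", "Stable"):
        raise ValueError("model_name must be 'Artistic' or 'Stable'")
    return {
        "model_name": model_name,
        "input_image": input_image,
        "render_factor": render_factor,
    }


def colorize(input_image, **kwargs):
    """Run the model remotely; requires network access and an API token."""
    import replicate  # third-party client, imported lazily

    return replicate.run(f"{MODEL}:{VERSION}", input=build_input(input_image, **kwargs))
```

Calling `colorize("https://example.com/old-photo.jpg", model_name="Stable")` would submit a prediction; the exact type of the returned value depends on the client version, so treat it as something to inspect rather than assume.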

Input Parameters

- model_name (required)

- Which model to use. Artistic produces more vibrant colors but may leave important parts of the image gray. Stable is better for nature scenery and is less prone to leaving parts of people gray.

- input_image (required)

- Path or URL of the image to colorize

- render_factor

- The default value of 35 has been carefully chosen and should work acceptably for most scenarios, though it probably won't be the best setting for any given image. This determines the resolution at which the color portion of the image is rendered. A lower resolution renders faster, and colors also tend to look more vibrant. Older and lower-quality images in particular generally benefit from a lower render factor. Higher render factors are often better for higher-quality images, but the colors may get slightly washed out.
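To make the link between render_factor and resolution concrete, here is a rough sketch. It assumes the convention used in the upstream DeOldify code, where the color pass runs on a square whose side is the render factor multiplied by a base of 16 pixels. That base value is an assumption not stated on this page, and `render_size` is a hypothetical helper.

```python
RENDER_BASE = 16  # assumption: base multiplier from upstream DeOldify, not from this page


def render_size(render_factor, render_base=RENDER_BASE):
    """Side length in pixels of the square used for the color pass (assumed formula)."""
    return render_factor * render_base


# Compare a low, default, and high render factor.
for factor in (20, 35, 45):
    print(factor, render_size(factor))
```

Under that assumption, the default of 35 corresponds to a 560-pixel square, and lowering the factor shrinks the color pass, which is consistent with the faster rendering described above.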

Output Schema

Output

Example Execution Logs

/root/.pyenv/versions/3.8.16/lib/python3.8/site-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.
warnings.warn(
/root/.pyenv/versions/3.8.16/lib/python3.8/site-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing `weights=ResNet34_Weights.IMAGENET1K_V1`. You can also use `weights=ResNet34_Weights.DEFAULT` to get the most up-to-date weights.
warnings.warn(msg)

Version Details

- Version ID: 0da600fab0c45a66211339f1c16b71345d22f26ef5fea3dca1bb90bb5711e950

- Version Created: February 1, 2023