ayushunleashed/minimax-remover 🔢🖼️ → 🖼️

About

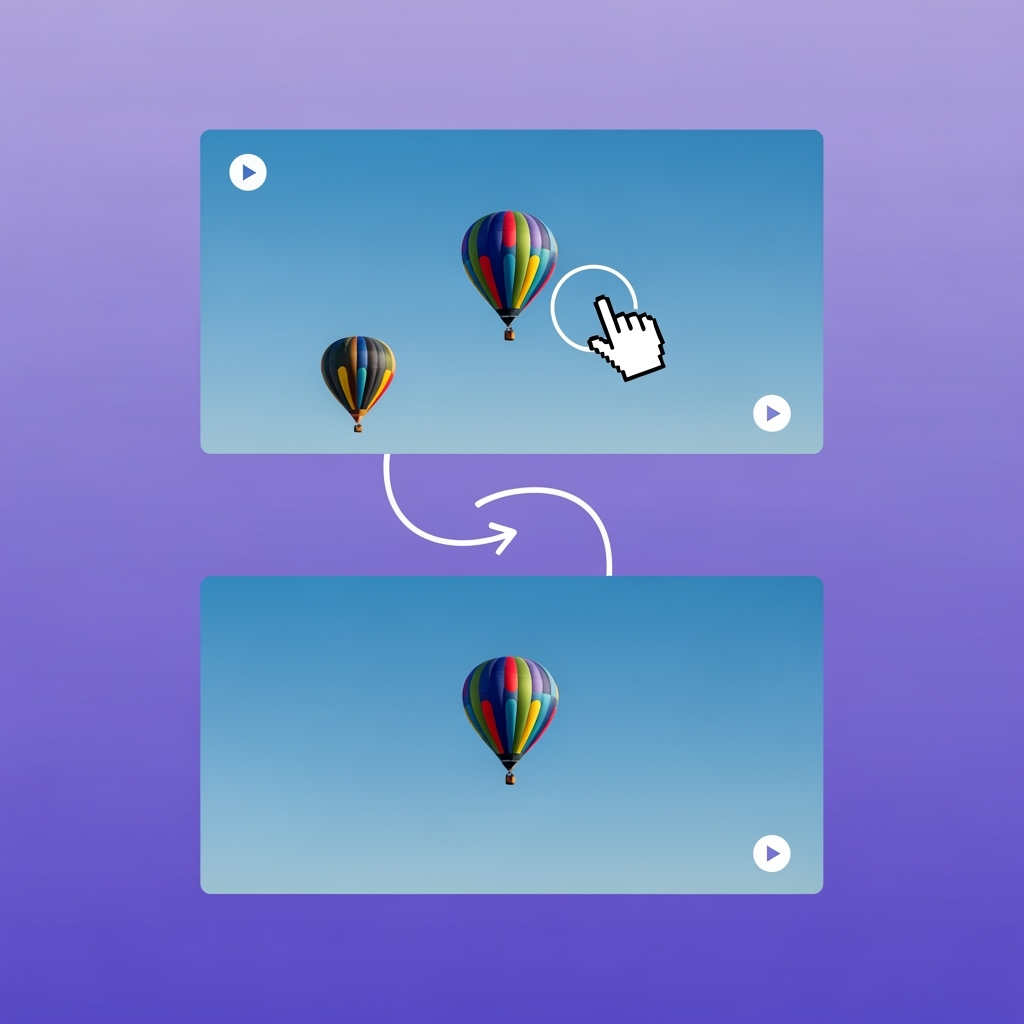

Remove any object from video - fast.

Example Output

Output

Performance Metrics

4.92s

Prediction Time

124.17s

Total Time

All Input Parameters

{

"fps": -1,

"mask": "https://replicate.delivery/pbxt/JgGNe7IjsavH2is00AVgbEwWroEtseid6f3HZIHI7MJH9Num/bmx-trees-mask4.mp4",

"video": "https://replicate.delivery/pbxt/JgGNdl0r9V14YxgJ4JlsCz71rSU9y8NJD9RDDdJo2Ua3r8C7/bmx-trees2.mp4",

"width": -1,

"height": -1,

"num_frames": -1,

"num_inference_steps": 6,

"mask_dilation_iterations": 8

}

Input Parameters

- fps

- Output video FPS (-1 = same as original video)

- mask (required)

- Mask video file where white areas indicate objects to remove. See examples: https://replicate.com/ayushunleashed/minimax-remover/readme

- seed

- Random seed for reproducible results (leave blank for random)

- video (required)

- Input video file with objects to be removed

- width

- Output video width (-1 = same as original video, auto-scaled to max 1920px if needed)

- height

- Output video height (-1 = same as original video, auto-scaled to max 1920px if needed)

- num_frames

- Number of frames to process (-1 = same as original video)

- num_inference_steps

- Number of denoising steps (higher = better quality, slower. 6=fast, 8=balanced, 12=high quality)

- mask_dilation_iterations

- Mask expansion iterations for robust removal (higher = more thorough removal)

Output Schema

Output

Example Execution Logs

Using seed: 6788 Validating input videos... 📹 Processing 80 frames at 432x240 🎬 Output FPS: 24 (original: 24.0) ⚙️ Quality: 6 inference steps 🎯 Mask dilation: 8 iterations Loading original video and mask... Loaded 80 frames from 80 total frames Loaded 80 mask frames Video shape: torch.Size([80, 240, 432, 3]) Mask shape: torch.Size([80, 240, 432, 1]) Running MiniMax-Remover inference... 0%| | 0/6 [00:00<?, ?it/s] 17%|█▋ | 1/6 [00:00<00:02, 2.30it/s] 33%|███▎ | 2/6 [00:00<00:01, 2.94it/s] 50%|█████ | 3/6 [00:00<00:00, 3.15it/s] 67%|██████▋ | 4/6 [00:01<00:00, 3.34it/s] 83%|████████▎ | 5/6 [00:01<00:00, 3.45it/s] 100%|██████████| 6/6 [00:01<00:00, 3.52it/s] 100%|██████████| 6/6 [00:01<00:00, 3.30it/s] Inference completed successfully! It is recommended to use `export_to_video` with `imageio` and `imageio-ffmpeg` as a backend. These libraries are not present in your environment. Attempting to use legacy OpenCV backend to export video. Support for the OpenCV backend will be deprecated in a future Diffusers version ✅ Video saved to: /tmp/tmpril9jgvo/output.mp4 📊 Output: 77 frames at 24 FPS

Version Details

- Version ID

f486e4c1469ce3d5042ad74ab06b16138ae228ddb49b54ce050a14cb2f1beb58- Version Created

- June 15, 2025