baaivision/emu3-chat 📝🖼️🔢 → 📝

About

Emu3-Chat for vision-language understanding

Example Output

Output

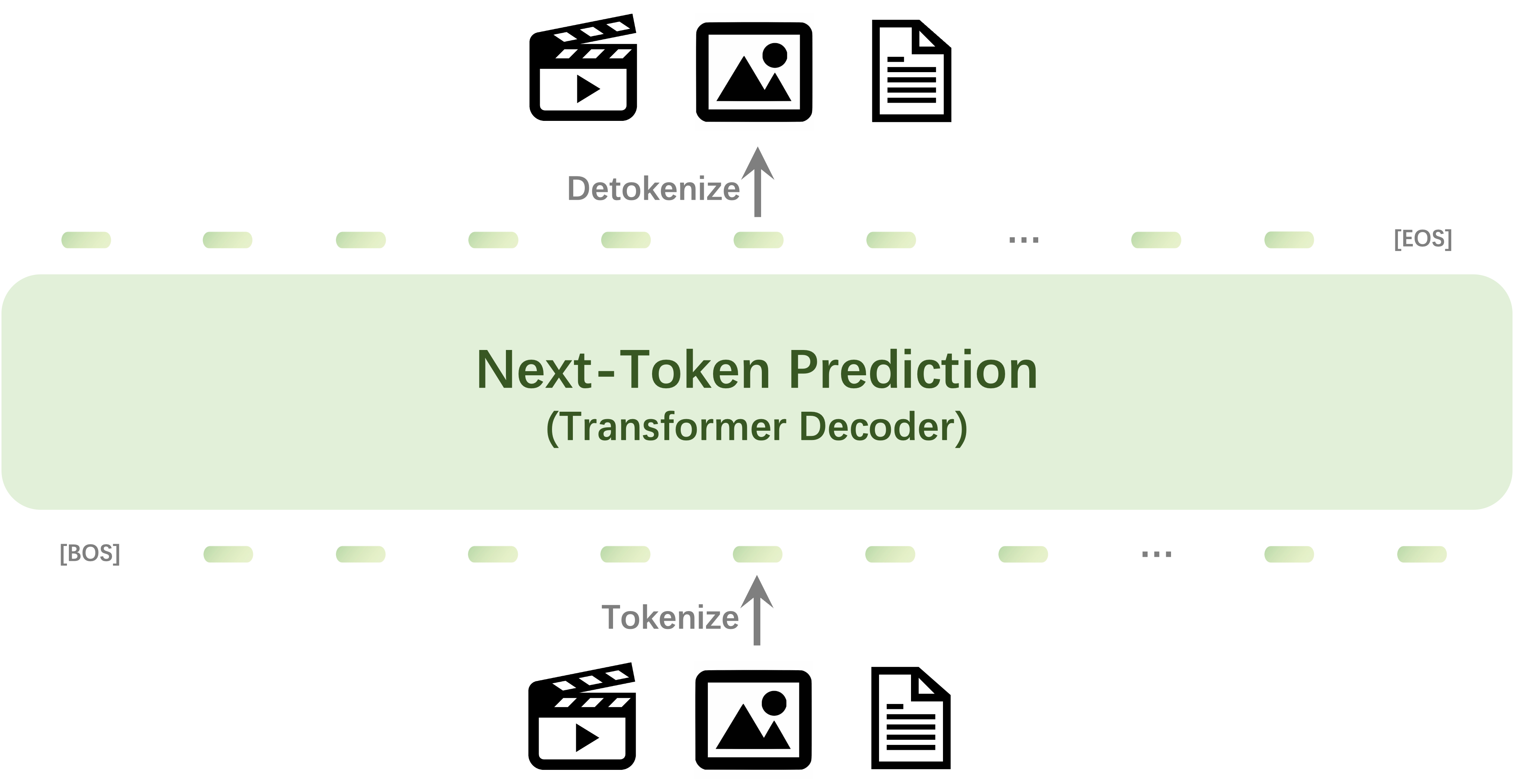

The image depicts a graphical representation of a process involving a "Next-Token Prediction" system. The process is encapsulated within a rectangular box with rounded corners, which is labeled "Next-Token Prediction (Transformer Decoder)." The box is divided into two main sections: the upper section contains the title, while the lower section shows the steps involved in the process.

The upper section of the box has a light green background with black text. The title reads "Next-Token Prediction (Transformer Decoder)" and is centered within the box. Below the title, there is an icon representing a person, which likely symbolizes the human element or user interface of the system. To the right of the person icon, there is an upward arrow, indicating an upward trend or progression.

The lower section of the box has a darker green background with white text and icons. The text reads "Tokenize" and is positioned to the right of the person icon. Below the text, there is an icon representing a document or file, which suggests that the process involves tokenizing text data. The overall layout of the image is clean and organized, with clear demarcations between the different components of the process.

Analysis and Description:

Title and Title: The title "Next-Token Prediction (Transformer Decoder)" indicates that the system is designed to predict next tokens in a sequence, likely for a natural language processing (NLP) task. The term "Transformer Decoder" suggests that this system uses a transformer architecture, which is a type of neural network architecture that has become popular in NLP for its ability to process sequential data.

User Interface: The icons and text are placed in a way that guides the user through the process. The person icon likely represents the user interface, while the upward arrow and document icon suggest the steps involved in tokenization and document handling.

Steps Involved:

- Tokenization: The process of converting text data into tokens, which are the basic units of text in NLP models.

- Document Handling: The system likely handles documents or files, which are used to store and manage the tokens.

Design Elements: The use of a rectangular box with rounded corners and a clear, organized layout makes the process easy to understand and navigate. The light green background for the title and the darker green background for the steps provides a visual distinction between the title and the steps, ensuring that the user can focus on the process without confusion.

Functionality: The system seems to be designed for efficiency and ease of use, as indicated by the clear and concise labeling of the steps. The use of a transformer architecture suggests that it is capable of handling complex text data, which is essential for NLP tasks.

Conclusion:

The image represents a detailed process for predicting next tokens in a sequence using a transformer-based transformer decoder system. The clear and organized layout, combined with the use of icons and text, makes it easy for users to understand and interact with the system. The steps involved in tokenization and document handling are clearly outlined, indicating a well-thought-out design for ease of use and efficiency.

Performance Metrics

All Input Parameters

{

"text": "Please describe the image.",

"image": "https://replicate.delivery/pbxt/Li3FLacLbDi0oQJNea3ijGncsfWeCVZrnHZgbffYu6k3WZ3v/arch.png",

"top_p": 0.9,

"temperature": 0.7,

"max_new_tokens": 1024

}

Input Parameters

- text

- Input prompt

- image

- top_p

- Controls diversity of the output. Valid when temperature > 0. Lower values make the output more focused, higher values make it more diverse.

- temperature

- Controls randomness. Lower values make the model more deterministic, higher values make it more random.

- max_new_tokens

- Maximum number of tokens to generate

Output Schema

Output

Example Execution Logs

/root/.pyenv/versions/3.11.10/lib/python3.11/site-packages/transformers/generation/configuration_utils.py:567: UserWarning: `do_sample` is set to `False`. However, `temperature` is set to `0.7` -- this flag is only used in sample-based generation modes. You should set `do_sample=True` or unset `temperature`. warnings.warn( /root/.pyenv/versions/3.11.10/lib/python3.11/site-packages/transformers/generation/configuration_utils.py:572: UserWarning: `do_sample` is set to `False`. However, `top_p` is set to `0.9` -- this flag is only used in sample-based generation modes. You should set `do_sample=True` or unset `top_p`. warnings.warn( The `seen_tokens` attribute is deprecated and will be removed in v4.41. Use the `cache_position` model input instead.

Version Details

- Version ID

52034986454b08979c0fcb855175ec26a2fd8d40b3ac90913275917c504ea7a4- Version Created

- September 29, 2024