dribnet/pixray-text2pixel ❓📝🔢 → 🖼️

About

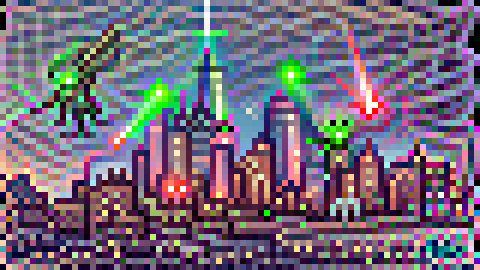

Turn any description into pixel art

Example Output

Output


Performance Metrics

Total Time: 442.65s

All Input Parameters

{
  "aspect": "widescreen",
  "prompts": "Aliens destroying NYC skyline with lasers. #pixelart",
  "pixel_scale": 1
}

Input Parameters

- aspect: wide or narrow
- prompts: text prompt
- pixel_scale: bigger pixels
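The three inputs can be assembled into a request payload along the following lines. This is a minimal sketch, not the model's documented client code: `build_input` and `run_remote` are hypothetical helper names, the positivity check on `pixel_scale` is an assumption, and `run_remote` assumes the model is reachable through the third-party `replicate` Python client, which this page does not state. The model name, version ID, and example values are copied from this page.

```python
import json

# Identifiers copied from this page.
MODEL = "dribnet/pixray-text2pixel"
VERSION = "d838a15c29f59f286c7f1caaf71db26f21f184419e80309c78a2689de319c6af"


def build_input(prompts, aspect="widescreen", pixel_scale=1):
    """Assemble the three listed inputs into a JSON-serialisable dict (hypothetical helper)."""
    if not prompts or not prompts.strip():
        raise ValueError("prompts must be a non-empty text prompt")
    if pixel_scale <= 0:
        # Assumption: the page only says "bigger pixels"; a positive scale seems implied.
        raise ValueError("pixel_scale must be positive")
    return {"aspect": aspect, "prompts": prompts, "pixel_scale": pixel_scale}


def run_remote(payload):
    """Assumption: the model is hosted where the `replicate` client can call it."""
    import replicate  # third-party; expects REPLICATE_API_TOKEN in the environment
    return replicate.run(f"{MODEL}:{VERSION}", input=payload)


payload = build_input("Aliens destroying NYC skyline with lasers. #pixelart")
print(json.dumps(payload, indent=2))
```

Pinning the full version ID keeps a run tied to the build listed on this page. `run_remote` is defined but not called here, since it needs network access and credentials.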

Output Schema

Output

Example Execution Logs

---> BasePixrayPredictor Predict
Using seed: 3244908265805850817
Running pixeldrawer with 80x45 grid
Using device: cuda:0
Optimising using: Adam
Using text prompts: ['Aliens destroying NYC skyline with lasers. #pixelart']
0it [00:00, ?it/s]
/root/.pyenv/versions/3.8.12/lib/python3.8/site-packages/torch/nn/functional.py:3609: UserWarning: Default upsampling behavior when mode=bilinear is changed to align_corners=False since 0.4.0. Please specify align_corners=True if the old behavior is desired. See the documentation of nn.Upsample for details.
warnings.warn(
iter: 0, loss: 2.98, losses: 0.98, 0.0797, 0.897, 0.0479, 0.925, 0.0489 (-0=>2.978)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 10, loss: 2.66, losses: 0.904, 0.0797, 0.791, 0.0474, 0.793, 0.0479 (-0=>2.663)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 20, loss: 2.55, losses: 0.861, 0.083, 0.75, 0.0482, 0.757, 0.0482 (-2=>2.536)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 30, loss: 2.41, losses: 0.812, 0.0844, 0.711, 0.0496, 0.705, 0.0487 (-2=>2.368)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 40, loss: 2.28, losses: 0.761, 0.0877, 0.662, 0.0538, 0.664, 0.0509 (-2=>2.276)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 50, loss: 2.33, losses: 0.793, 0.0868, 0.667, 0.0534, 0.68, 0.05 (-5=>2.23)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 60, loss: 2.16, losses: 0.725, 0.0875, 0.612, 0.0578, 0.626, 0.0523 (-0=>2.162)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 70, loss: 2.13, losses: 0.715, 0.0874, 0.601, 0.0588, 0.61, 0.0538 (-0=>2.126)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 80, loss: 2.12, losses: 0.71, 0.0884, 0.599, 0.0585, 0.607, 0.0546 (-2=>2.117)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 90, loss: 2.09, losses: 0.705, 0.0881, 0.592, 0.0599, 0.591, 0.0552 (-2=>2.089)
0it [00:00, ?it/s] 0it [00:12, ?it/s] 0it [00:00, ?it/s]
iter: 100, loss: 2.09, losses: 0.702, 0.0885, 0.588, 0.0596, 0.594, 0.0547 (-0=>2.087)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 110, loss: 2.08, losses: 0.7, 0.0874, 0.584, 0.0601, 0.59, 0.0554 (-0=>2.076)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 120, loss: 2.19, losses: 0.744, 0.0869, 0.618, 0.0577, 0.628, 0.0533 (-10=>2.076)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 130, loss: 2.08, losses: 0.698, 0.0875, 0.588, 0.0592, 0.588, 0.055 (-0=>2.076)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 140, loss: 2.2, losses: 0.752, 0.0879, 0.618, 0.0573, 0.628, 0.0532 (-3=>2.065)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 150, loss: 2.17, losses: 0.74, 0.0879, 0.613, 0.0576, 0.621, 0.054 (-13=>2.065)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 160, loss: 2.05, losses: 0.687, 0.0876, 0.575, 0.0612, 0.582, 0.0566 (-0=>2.051)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 170, loss: 2.06, losses: 0.696, 0.0881, 0.575, 0.061, 0.58, 0.0562 (-2=>2.044)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 180, loss: 2.04, losses: 0.683, 0.0891, 0.571, 0.0618, 0.574, 0.0569 (-0=>2.036)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 190, loss: 2.05, losses: 0.687, 0.0883, 0.575, 0.0608, 0.579, 0.0561 (-10=>2.036)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 200, loss: 2.04, losses: 0.683, 0.0887, 0.579, 0.0608, 0.575, 0.0566 (-20=>2.036)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 210, loss: 2.15, losses: 0.731, 0.0867, 0.606, 0.0583, 0.611, 0.0543 (-2=>2.024)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 220, loss: 2.03, losses: 0.681, 0.0889, 0.568, 0.0617, 0.573, 0.0571 (-12=>2.024)
0it [00:00, ?it/s]
Dropping learning rate
0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 230, loss: 2.17, losses: 0.735, 0.087, 0.615, 0.0577, 0.621, 0.0537 (-4=>2.02)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 240, loss: 2.15, losses: 0.728, 0.0867, 0.61, 0.0584, 0.613, 0.0539 (-14=>2.02)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 250, loss: 2.14, losses: 0.725, 0.0876, 0.603, 0.059, 0.607, 0.0547 (-24=>2.02)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 260, loss: 2.02, losses: 0.679, 0.0877, 0.568, 0.0618, 0.566, 0.0576 (-7=>2.015)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 270, loss: 2.1, losses: 0.712, 0.0872, 0.592, 0.0607, 0.597, 0.0554 (-17=>2.015)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 280, loss: 2.15, losses: 0.729, 0.0866, 0.608, 0.0584, 0.618, 0.0542 (-27=>2.015)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 290, loss: 2.16, losses: 0.723, 0.0869, 0.614, 0.0591, 0.619, 0.0542 (-1=>2.013)
0it [00:00, ?it/s] 0it [00:13, ?it/s] 0it [00:00, ?it/s]
iter: 300, finished (-6=>2.008)
0it [00:00, ?it/s] 0it [00:00, ?it/s]
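The `iter:` records in the log above follow a regular shape, so they can be pulled into structured data for plotting or comparison. The sketch below is one way to do that with a regular expression. `parse_log` and the field names are hypothetical, and reading `(-N=>X)` as "best loss X was seen N iterations ago" is an interpretation of the log, not something the page states. The final `iter: 300, finished` record has no loss values and is skipped by this pattern.

```python
import re

# Whitespace is matched loosely so records split across line breaks still parse.
RECORD_RE = re.compile(
    r"iter:\s*(?P<iter>\d+),\s*loss:\s*(?P<loss>[\d.]+),\s*"
    r"losses:\s*(?P<parts>[\d.,\s]+?)\s*\(-(?P<since>\d+)=>(?P<best>[\d.]+)\)"
)


def parse_log(text):
    """Return one dict per 'iter: N, loss: ...' record found in the log text."""
    records = []
    for m in RECORD_RE.finditer(text):
        records.append({
            "iter": int(m["iter"]),
            "loss": float(m["loss"]),
            "parts": [float(p) for p in m["parts"].split(",")],
            "since_best": int(m["since"]),  # assumed meaning: iterations since best
            "best": float(m["best"]),       # assumed meaning: best loss so far
        })
    return records


# Two records copied from the log above; the second is split across lines on purpose.
SAMPLE = """
iter: 0, loss: 2.98, losses: 0.98, 0.0797, 0.897, 0.0479, 0.925, 0.0489 (-0=>2.978)
iter: 50,
loss: 2.33, losses: 0.793, 0.0868, 0.667, 0.0534, 0.68, 0.05 (-5=>2.23)
"""

for rec in parse_log(SAMPLE):
    print(rec["iter"], rec["loss"], rec["best"], round(sum(rec["parts"]), 3))
```

In the records checked here, the six component losses sum to the reported total within rounding, which suggests `loss` is their plain sum.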

Version Details

- Version ID: d838a15c29f59f286c7f1caaf71db26f21f184419e80309c78a2689de319c6af
- Version Created: October 27, 2022