fofr/hunyuan-take-on-me

About

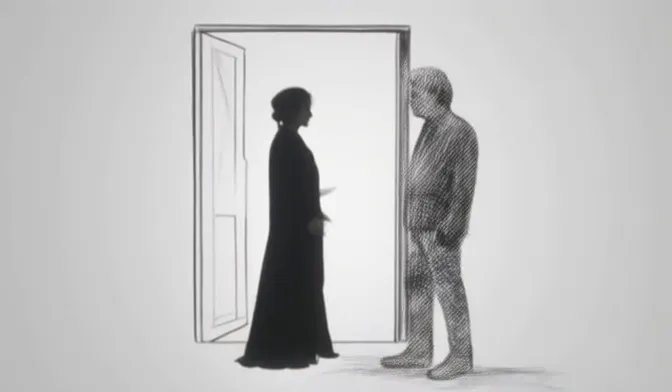

Hunyuan fine-tuned on 3s clips from A-ha's Take on Me music video. Use the TKONME trigger word in your prompt.

Example Output

Prompt:

"In the style of TKONME, this video sequence is a sketched animations depicting a young woman with hair in a bun and loose strands, she looks up."

Output

Performance Metrics

- Prediction Time: 212.96s

- Total Time: 268.13s

All Input Parameters

{

"crf": 19,

"steps": 50,

"width": 640,

"height": 360,

"prompt": "In the style of TKONME, this video sequence is a sketched animations depicting a young woman with hair in a bun and loose strands, she looks up.",

"lora_url": "",

"flow_shift": 9,

"frame_rate": 24,

"num_frames": 85,

"force_offload": true,

"lora_strength": 0.85,

"guidance_scale": 6,

"denoise_strength": 1

}
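As a minimal sketch, the inputs above could be packaged for Replicate's HTTP predictions endpoint roughly as follows. The version ID is the one listed under Version Details on this page. The helper name build_prediction_request and the REPLICATE_API_TOKEN environment variable are assumptions for illustration. The request is only constructed here, not sent.

```python
import json
import os
import urllib.request

# Version ID taken from the "Version Details" section of this page.
VERSION_ID = "fe4347d905314abbcb3270b1abd5aa370dff6d61d769b59ea8a91961c40ba420"

# Inputs copied from "All Input Parameters" above.
EXAMPLE_INPUT = {
    "crf": 19,
    "steps": 50,
    "width": 640,
    "height": 360,
    "prompt": (
        "In the style of TKONME, this video sequence is a sketched animations "
        "depicting a young woman with hair in a bun and loose strands, she looks up."
    ),
    "lora_url": "",
    "flow_shift": 9,
    "frame_rate": 24,
    "num_frames": 85,
    "force_offload": True,
    "lora_strength": 0.85,
    "guidance_scale": 6,
    "denoise_strength": 1,
}


def build_prediction_request(inputs, token):
    """Build (but do not send) a POST request for the predictions endpoint.

    Hypothetical helper: it only assembles the JSON body and headers.
    """
    body = json.dumps({"version": VERSION_ID, "input": inputs}).encode("utf-8")
    return urllib.request.Request(
        "https://api.replicate.com/v1/predictions",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    token = os.environ.get("REPLICATE_API_TOKEN", "")  # assumed variable name
    request = build_prediction_request(EXAMPLE_INPUT, token)
    print(request.get_method(), request.full_url)
```

Sending the request (for example with urllib.request.urlopen) requires a valid API token. The prediction then runs asynchronously and has to be polled for its output.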

Input Parameters

- crf: CRF (quality) for H264 encoding. Lower values = higher quality.

- seed: Set a seed for reproducibility. Random by default.

- steps: Number of diffusion steps.

- width: Width for the generated video.

- height: Height for the generated video.

- prompt: The text prompt describing your video scene.

- lora_url: A URL pointing to your LoRA .safetensors file or a Hugging Face repo (e.g. 'user/repo' - uses the first .safetensors file).

- scheduler: Algorithm used to generate the video frames.

- flow_shift: Video continuity factor (flow).

- frame_rate: Video frame rate.

- num_frames: How many frames (duration) in the resulting video.

- enhance_end: When to end enhancement in the video. Must be greater than enhance_start.

- enhance_start: When to start enhancement in the video. Must be less than enhance_end.

- force_offload: Whether to force model layers to be offloaded to CPU.

- lora_strength: Scale/strength for your LoRA.

- enhance_double: Apply enhancement across frame pairs.

- enhance_single: Apply enhancement to individual frames.

- enhance_weight: Strength of the video enhancement effect.

- guidance_scale: Overall influence of text vs. model.

- denoise_strength: Controls how strongly noise is applied each step.

- replicate_weights: A .tar file containing LoRA weights from Replicate.
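To make a few of these descriptions concrete, here is a small sketch that derives the clip duration from num_frames and frame_rate and checks the enhance_start / enhance_end ordering described in the list. The helper names are hypothetical, the 0.0 and 1.0 enhancement values in the usage are made-up illustrations (this page does not state defaults), and the model's own validation may differ.

```python
def clip_duration_seconds(num_frames: int, frame_rate: float) -> float:
    """Duration of the output video: frames divided by frames per second."""
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    return num_frames / frame_rate


def check_enhance_window(enhance_start: float, enhance_end: float) -> None:
    """Enforce the documented rule that enhance_start is less than enhance_end."""
    if not enhance_start < enhance_end:
        raise ValueError("enhance_start must be less than enhance_end")


# Values from the example run on this page: 85 frames at 24 fps.
duration = clip_duration_seconds(85, 24)
print(f"{duration:.2f} s")  # 3.54 s

# Illustrative values only; not defaults taken from the model.
check_enhance_window(0.0, 1.0)
```

The roughly 3.5 second result lines up with the 3s clips the model was fine-tuned on.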

Output Schema

Output

Example Execution Logs

Random seed set to: 171269911

Checking inputs

====================================

Checking weights

✅ hunyuan_video_vae_bf16.safetensors exists in ComfyUI/models/vae

✅ hunyuan_video_720_fp8_e4m3fn.safetensors exists in ComfyUI/models/diffusion_models

====================================

Running workflow

[ComfyUI] got prompt

Executing node 7, title: HunyuanVideo VAE Loader, class type: HyVideoVAELoader

[ComfyUI] Loading text encoder model (clipL) from: /src/ComfyUI/models/clip/clip-vit-large-patch14

Executing node 16, title: (Down)Load HunyuanVideo TextEncoder, class type: DownloadAndLoadHyVideoTextEncoder

[ComfyUI] Text encoder to dtype: torch.float16

[ComfyUI] Loading tokenizer (clipL) from: /src/ComfyUI/models/clip/clip-vit-large-patch14

[ComfyUI] Loading text encoder model (llm) from: /src/ComfyUI/models/LLM/llava-llama-3-8b-text-encoder-tokenizer

[ComfyUI]

[ComfyUI] Loading checkpoint shards: 0%| | 0/4 [00:00<?, ?it/s]

[ComfyUI] Loading checkpoint shards: 25%|██▌ | 1/4 [00:00<00:01, 1.58it/s]

[ComfyUI] Loading checkpoint shards: 50%|█████ | 2/4 [00:01<00:01, 1.63it/s]

[ComfyUI] Loading checkpoint shards: 75%|███████▌ | 3/4 [00:01<00:00, 1.66it/s]

[ComfyUI] Loading checkpoint shards: 100%|██████████| 4/4 [00:01<00:00, 2.38it/s]

[ComfyUI] Loading checkpoint shards: 100%|██████████| 4/4 [00:01<00:00, 2.04it/s]

[ComfyUI] Text encoder to dtype: torch.float16

[ComfyUI] Loading tokenizer (llm) from: /src/ComfyUI/models/LLM/llava-llama-3-8b-text-encoder-tokenizer

Executing node 30, title: HunyuanVideo TextEncode, class type: HyVideoTextEncode

[ComfyUI] llm prompt attention_mask shape: torch.Size([1, 161]), masked tokens: 34

[ComfyUI] clipL prompt attention_mask shape: torch.Size([1, 77]), masked tokens: 35

Executing node 41, title: HunyuanVideo Lora Select, class type: HyVideoLoraSelect

Executing node 1, title: HunyuanVideo Model Loader, class type: HyVideoModelLoader

[ComfyUI] model_type FLOW

[ComfyUI] Using accelerate to load and assign model weights to device...

[ComfyUI] Loading LoRA: lora_comfyui with strength: 0.85

[ComfyUI] Requested to load HyVideoModel

[ComfyUI] Loading 1 new model

[ComfyUI] loaded completely 0.0 12555.953247070312 True

[ComfyUI] Input (height, width, video_length) = (360, 640, 85)

Executing node 3, title: HunyuanVideo Sampler, class type: HyVideoSampler

[ComfyUI] Sampling 85 frames in 22 latents at 640x368 with 50 inference steps

[ComfyUI] Scheduler config: FrozenDict([('num_train_timesteps', 1000), ('shift', 9.0), ('reverse', True), ('solver', 'euler'), ('n_tokens', None), ('_use_default_values', ['num_train_timesteps', 'n_tokens'])])

[ComfyUI]

[ComfyUI] 0%| | 0/50 [00:00<?, ?it/s]

[ComfyUI] 2%|▏ | 1/50 [00:02<02:09, 2.64s/it]

[ComfyUI] 4%|▍ | 2/50 [00:06<02:30, 3.13s/it]

[ComfyUI] 6%|▌ | 3/50 [00:09<02:34, 3.28s/it]

[ComfyUI] 8%|▊ | 4/50 [00:13<02:34, 3.36s/it]

[ComfyUI] 10%|█ | 5/50 [00:16<02:32, 3.40s/it]

[ComfyUI] 12%|█▏ | 6/50 [00:19<02:30, 3.42s/it]

[ComfyUI] 14%|█▍ | 7/50 [00:23<02:27, 3.44s/it]

[ComfyUI] 16%|█▌ | 8/50 [00:26<02:24, 3.45s/it]

[ComfyUI] 18%|█▊ | 9/50 [00:30<02:21, 3.46s/it]

[ComfyUI] 20%|██ | 10/50 [00:33<02:18, 3.46s/it]

[ComfyUI] 22%|██▏ | 11/50 [00:37<02:15, 3.47s/it]

[ComfyUI] 24%|██▍ | 12/50 [00:40<02:11, 3.47s/it]

[ComfyUI] 26%|██▌ | 13/50 [00:44<02:08, 3.47s/it]

[ComfyUI] 28%|██▊ | 14/50 [00:47<02:04, 3.47s/it]

[ComfyUI] 30%|███ | 15/50 [00:51<02:01, 3.47s/it]

[ComfyUI] 32%|███▏ | 16/50 [00:54<01:58, 3.47s/it]

[ComfyUI] 34%|███▍ | 17/50 [00:58<01:54, 3.47s/it]

[ComfyUI] 36%|███▌ | 18/50 [01:01<01:51, 3.47s/it]

[ComfyUI] 38%|███▊ | 19/50 [01:05<01:47, 3.47s/it]

[ComfyUI] 40%|████ | 20/50 [01:08<01:44, 3.47s/it]

[ComfyUI] 42%|████▏ | 21/50 [01:12<01:40, 3.47s/it]

[ComfyUI] 44%|████▍ | 22/50 [01:15<01:37, 3.47s/it]

[ComfyUI] 46%|████▌ | 23/50 [01:19<01:33, 3.47s/it]

[ComfyUI] 48%|████▊ | 24/50 [01:22<01:30, 3.47s/it]

[ComfyUI] 50%|█████ | 25/50 [01:25<01:26, 3.47s/it]

[ComfyUI] 52%|█████▏ | 26/50 [01:29<01:23, 3.47s/it]

[ComfyUI] 54%|█████▍ | 27/50 [01:32<01:19, 3.47s/it]

[ComfyUI] 56%|█████▌ | 28/50 [01:36<01:16, 3.47s/it]

[ComfyUI] 58%|█████▊ | 29/50 [01:39<01:12, 3.47s/it]

[ComfyUI] 60%|██████ | 30/50 [01:43<01:09, 3.47s/it]

[ComfyUI] 62%|██████▏ | 31/50 [01:46<01:06, 3.47s/it]

[ComfyUI] 64%|██████▍ | 32/50 [01:50<01:02, 3.47s/it]

[ComfyUI] 66%|██████▌ | 33/50 [01:53<00:59, 3.47s/it]

[ComfyUI] 68%|██████▊ | 34/50 [01:57<00:55, 3.47s/it]

[ComfyUI] 70%|███████ | 35/50 [02:00<00:52, 3.47s/it]

[ComfyUI] 72%|███████▏ | 36/50 [02:04<00:48, 3.47s/it]

[ComfyUI] 74%|███████▍ | 37/50 [02:07<00:45, 3.47s/it]

[ComfyUI] 76%|███████▌ | 38/50 [02:11<00:41, 3.47s/it]

[ComfyUI] 78%|███████▊ | 39/50 [02:14<00:38, 3.47s/it]

[ComfyUI] 80%|████████ | 40/50 [02:18<00:34, 3.47s/it]

[ComfyUI] 82%|████████▏ | 41/50 [02:21<00:31, 3.47s/it]

[ComfyUI] 84%|████████▍ | 42/50 [02:25<00:27, 3.47s/it]

[ComfyUI] 86%|████████▌ | 43/50 [02:28<00:24, 3.47s/it]

[ComfyUI] 88%|████████▊ | 44/50 [02:31<00:20, 3.47s/it]

[ComfyUI] 90%|█████████ | 45/50 [02:35<00:17, 3.47s/it]

[ComfyUI] 92%|█████████▏| 46/50 [02:38<00:13, 3.47s/it]

[ComfyUI] 94%|█████████▍| 47/50 [02:42<00:10, 3.47s/it]

[ComfyUI] 96%|█████████▌| 48/50 [02:45<00:06, 3.47s/it]

[ComfyUI] 98%|█████████▊| 49/50 [02:49<00:03, 3.48s/it]

[ComfyUI] 100%|██████████| 50/50 [02:52<00:00, 3.47s/it]

[ComfyUI] 100%|██████████| 50/50 [02:52<00:00, 3.46s/it]

[ComfyUI] Allocated memory: memory=12.759 GB

[ComfyUI] Max allocated memory: max_memory=16.810 GB

[ComfyUI] Max reserved memory: max_reserved=18.688 GB

Executing node 5, title: HunyuanVideo Decode, class type: HyVideoDecode

[ComfyUI]

[ComfyUI] Decoding rows: 0%| | 0/2 [00:00<?, ?it/s]

[ComfyUI] Decoding rows: 50%|█████ | 1/2 [00:01<00:01, 1.52s/it]

[ComfyUI] Decoding rows: 100%|██████████| 2/2 [00:02<00:00, 1.27s/it]

[ComfyUI] Decoding rows: 100%|██████████| 2/2 [00:02<00:00, 1.31s/it]

[ComfyUI]

[ComfyUI] Blending tiles: 0%| | 0/2 [00:00<?, ?it/s]

[ComfyUI] Blending tiles: 100%|██████████| 2/2 [00:00<00:00, 28.42it/s]

[ComfyUI]

[ComfyUI] Decoding rows: 0%| | 0/2 [00:00<?, ?it/s]

[ComfyUI] Decoding rows: 50%|█████ | 1/2 [00:00<00:00, 1.18it/s]

[ComfyUI] Decoding rows: 100%|██████████| 2/2 [00:01<00:00, 1.39it/s]

[ComfyUI] Decoding rows: 100%|██████████| 2/2 [00:01<00:00, 1.36it/s]

[ComfyUI]

[ComfyUI] Blending tiles: 0%| | 0/2 [00:00<?, ?it/s]

Executing node 34, title: Video Combine 🎥🅥🅗🅢, class type: VHS_VideoCombine

[ComfyUI] Blending tiles: 100%|██████████| 2/2 [00:00<00:00, 49.09it/s]

[ComfyUI] Prompt executed in 208.11 seconds

outputs: {'34': {'gifs': [{'filename': 'HunyuanVideo_00001.mp4', 'subfolder': '', 'type': 'output', 'format': 'video/h264-mp4', 'frame_rate': 24.0, 'workflow': 'HunyuanVideo_00001.png', 'fullpath': '/tmp/outputs/HunyuanVideo_00001.mp4'}]}}

====================================

HunyuanVideo_00001.png

HunyuanVideo_00001.mp4
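The sampler line in the log ("Sampling 85 frames in 22 latents at 640x368") can be reproduced with a little arithmetic. The sketch below assumes a 4x temporal compression with one extra latent for the first frame, and that spatial dimensions are rounded up to a multiple of 16. Both are inferences that happen to match this log, not values stated on this page, and the function names are hypothetical.

```python
import math


def latent_frame_count(num_frames: int, temporal_factor: int = 4) -> int:
    """Latent frames, assuming the first frame is kept and the rest are grouped by temporal_factor."""
    return (num_frames - 1) // temporal_factor + 1


def round_up_to_multiple(value: int, multiple: int = 16) -> int:
    """Round a pixel dimension up to the next multiple (assumed to be 16 here)."""
    return math.ceil(value / multiple) * multiple


# Inputs from the example run: 85 frames at 640x360.
print(latent_frame_count(85))  # 22
print(round_up_to_multiple(640), round_up_to_multiple(360))  # 640 368
```

Under these assumptions the requested height of 360 becomes 368 during sampling, which would explain why the log reports 640x368 rather than 640x360.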

Version Details

- Version ID

fe4347d905314abbcb3270b1abd5aa370dff6d61d769b59ea8a91961c40ba420

- Version Created

January 8, 2025