pixray/text2image-future ❓📝 → 🖼️

About

pixray text2image (future branch)

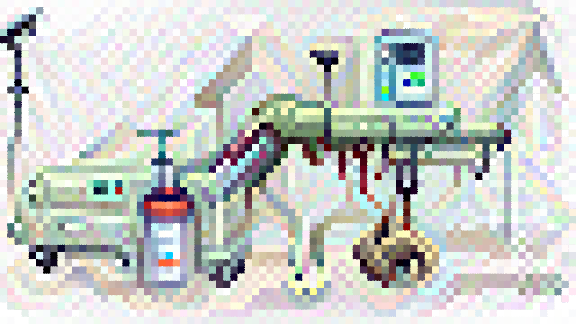

Example Output

Output

Performance Metrics

- Prediction Time: 538.42s

- Total Time: 538.64s

All Input Parameters

{

"drawer": "pixel",

"prompts": "evil medical device from a post apocalyptic veterinary clinic #pixelart",

"settings": "# random number seed can be a word or number\nseed: reference\n# higher quality than default\nquality: better\n# smooth out the result a bit\ncustom_loss: smoothness:0.5\n# enable transparency in image\ntransparent: true\n# how much to encourage transparency (can also be negative) \ntransparent_weight: 0.1\n\n"

}
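The `settings` value above is a single JSON string whose `\n` escapes hide several `name: value` lines and `#` comments. A minimal sketch of unpacking it follows. `parse_settings` is a hypothetical helper written for illustration, not part of pixray, and the real tool may parse the string differently (the format resembles YAML).

```python
# The settings string from the example input, with \n escapes decoded by Python.
SETTINGS = (
    "# random number seed can be a word or number\n"
    "seed: reference\n"
    "# higher quality than default\n"
    "quality: better\n"
    "# smooth out the result a bit\n"
    "custom_loss: smoothness:0.5\n"
    "# enable transparency in image\n"
    "transparent: true\n"
    "# how much to encourage transparency (can also be negative) \n"
    "transparent_weight: 0.1\n\n"
)


def parse_settings(text):
    """Hypothetical helper: collect `name: value` lines, skipping blanks and comments."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so "smoothness:0.5" stays intact.
        name, _, value = line.partition(":")
        result[name.strip()] = value.strip()
    return result


parsed = parse_settings(SETTINGS)
for name, value in parsed.items():
    print(f"{name} = {value}")
```

Values are kept as strings here; converting `true` or `0.1` to typed values is left out on purpose, since the expected types are not stated on this page.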

Input Parameters

- drawer: render engine

- prompts: text prompt

- settings: extra settings in `name: value` format. Reference: https://dazhizhong.gitbook.io/pixray-docs/docs/primary-settings
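As a rough illustration of how the three parameters fit together, the sketch below assembles an input object of the same shape as the example on this page. `build_settings` and `build_input` are hypothetical helper names, and the option names passed in are just the ones that appear in the example; nothing here calls a real API.

```python
import json


def build_settings(options):
    """Hypothetical helper: join options into newline-separated `name: value` lines."""
    return "".join(f"{name}: {value}\n" for name, value in options.items())


def build_input(drawer, prompts, options):
    """Hypothetical helper: assemble the three documented input parameters."""
    return {
        "drawer": drawer,                      # render engine
        "prompts": prompts,                    # text prompt
        "settings": build_settings(options),   # extra settings, one per line
    }


payload = build_input(
    "pixel",
    "evil medical device from a post apocalyptic veterinary clinic #pixelart",
    {"seed": "reference", "quality": "better"},
)
print(json.dumps(payload, indent=2))
```

Serializing with `json.dumps` turns the newlines in `settings` back into `\n` escapes, which is why the example input shows the whole settings block on one line.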

Output Schema

Output

Example Execution Logs

---> BasePixrayPredictor Predict
Using seed: 3903845079
Running pixeldrawer with 80x45 grid
0%| | 0.00/244M [00:00<?, ?iB/s]
0%|▏ | 1.19M/244M [00:00<00:21, 11.9MiB/s]
5%|█▉ | 12.6M/244M [00:00<00:14, 16.3MiB/s]
14%|█████▏ | 33.6M/244M [00:00<00:09, 22.6MiB/s]
21%|███████▉ | 50.6M/244M [00:00<00:06, 30.6MiB/s]
27%|██████████▍ | 67.0M/244M [00:00<00:04, 40.6MiB/s]
34%|████████████▊ | 82.4M/244M [00:00<00:03, 52.4MiB/s]
44%|████████████████▉ | 106M/244M [00:00<00:02, 68.7MiB/s]
53%|████████████████████▌ | 128M/244M [00:00<00:01, 87.0MiB/s]
61%|████████████████████████▌ | 149M/244M [00:00<00:00, 106MiB/s]
71%|████████████████████████████▍ | 173M/244M [00:01<00:00, 129MiB/s]
79%|███████████████████████████████▊ | 194M/244M [00:01<00:00, 146MiB/s]
88%|███████████████████████████████████▏ | 214M/244M [00:01<00:00, 161MiB/s]
96%|██████████████████████████████████████▍ | 235M/244M [00:01<00:00, 171MiB/s]
100%|████████████████████████████████████████| 244M/244M [00:01<00:00, 187MiB/s]
Loaded CLIP RN50: 224x224 and 102.01M params
Loaded CLIP ViT-B/32: 224x224 and 151.28M params
Loaded CLIP ViT-B/16: 224x224 and 149.62M params
Using device: cuda:0
Optimising using: Adam
Using text prompts: ['evil medical device from a post apocalyptic veterinary clinic #pixelart']
using custom losses: smoothness:0.5
0it [00:00, ?it/s]
iter: 0, loss: 3.23, losses: 1.01, 0.0859, 0.928, 0.0617, 0.904, 0.0643, 0.1, 0.0725 (-0=>3.227)
0it [00:00, ?it/s]
/root/.pyenv/versions/3.8.12/lib/python3.8/site-packages/torch/nn/functional.py:3609: UserWarning: Default upsampling behavior when mode=bilinear is changed to align_corners=False since 0.4.0. Please specify align_corners=True if the old behavior is desired. See the documentation of nn.Upsample for details.
warnings.warn(
0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 10, loss: 2.88, losses: 0.916, 0.0816, 0.799, 0.061, 0.798, 0.0613, 0.0905, 0.0707 (-0=>2.878)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 20, loss: 2.73, losses: 0.874, 0.0823, 0.753, 0.0629, 0.74, 0.0628, 0.0843, 0.0704 (-0=>2.73)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 30, loss: 2.62, losses: 0.848, 0.0824, 0.724, 0.0635, 0.685, 0.0644, 0.0787, 0.0711 (-0=>2.617)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 40, loss: 2.53, losses: 0.818, 0.0824, 0.695, 0.0662, 0.66, 0.0663, 0.0731, 0.0716 (-3=>2.533)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 50, loss: 2.53, losses: 0.815, 0.0837, 0.7, 0.0643, 0.66, 0.0655, 0.0681, 0.0704 (-2=>2.493)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 60, loss: 2.46, losses: 0.786, 0.083, 0.676, 0.0673, 0.648, 0.0676, 0.0642, 0.0714 (-1=>2.428)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 70, loss: 2.46, losses: 0.781, 0.0844, 0.682, 0.0658, 0.648, 0.0672, 0.0608, 0.0695 (-1=>2.412)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 80, loss: 2.46, losses: 0.788, 0.0835, 0.682, 0.0654, 0.645, 0.0668, 0.0579, 0.0716 (-2=>2.376)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 90, loss: 2.41, losses: 0.771, 0.0833, 0.665, 0.067, 0.631, 0.0677, 0.0552, 0.0682 (-3=>2.364)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 100, loss: 2.35, losses: 0.746, 0.0847, 0.65, 0.067, 0.619, 0.0692, 0.053, 0.0624 (-0=>2.352)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 110, loss: 2.35, losses: 0.748, 0.0844, 0.651, 0.067, 0.616, 0.0691, 0.051, 0.0596 (-1=>2.331)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 120, loss: 2.36, losses: 0.756, 0.0844, 0.651, 0.0662, 0.618, 0.069, 0.0491, 0.0617 (-11=>2.331)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 130, loss: 2.36, losses: 0.756, 0.0839, 0.654, 0.0666, 0.622, 0.0688, 0.0472, 0.061 (-6=>2.31)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 140, loss: 2.36, losses: 0.753, 0.0854, 0.652, 0.0665, 0.623, 0.0677, 0.0458, 0.0638 (-6=>2.305)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 150, loss: 2.33, losses: 0.741, 0.0847, 0.646, 0.0665, 0.614, 0.0693, 0.0447, 0.0621 (-5=>2.297)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 160, loss: 2.31, losses: 0.734, 0.0842, 0.641, 0.0675, 0.609, 0.0699, 0.0437, 0.061 (-15=>2.297)
0it [00:00, ?it/s] 0it [00:15, ?it/s] 0it [00:00, ?it/s]
iter: 170, loss: 2.3, losses: 0.736, 0.0851, 0.633, 0.0673, 0.606, 0.0703, 0.0428, 0.0597 (-3=>2.289)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 180, loss: 2.31, losses: 0.741, 0.0849, 0.637, 0.0668, 0.61, 0.0689, 0.0418, 0.0622 (-5=>2.28)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 190, loss: 2.32, losses: 0.745, 0.0844, 0.642, 0.0659, 0.613, 0.0683, 0.0409, 0.0629 (-15=>2.28)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 200, loss: 2.26, losses: 0.716, 0.0857, 0.624, 0.0677, 0.599, 0.0703, 0.0401, 0.0618 (-0=>2.265)
0it [00:01, ?it/s] 0it [00:17, ?it/s] 0it [00:00, ?it/s]
iter: 210, loss: 2.33, losses: 0.746, 0.085, 0.646, 0.0659, 0.616, 0.0683, 0.0392, 0.0641 (-1=>2.255)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 220, loss: 2.27, losses: 0.724, 0.0844, 0.628, 0.0674, 0.601, 0.0704, 0.0386, 0.0578 (-5=>2.252)
Dropping learning rate
0it [00:01, ?it/s] 0it [00:17, ?it/s] 0it [00:00, ?it/s]
iter: 230, loss: 2.3, losses: 0.739, 0.085, 0.634, 0.0671, 0.606, 0.0693, 0.0384, 0.0602 (-1=>2.264)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 240, loss: 2.26, losses: 0.716, 0.0849, 0.627, 0.0678, 0.599, 0.07, 0.0383, 0.0565 (-5=>2.25)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 250, loss: 2.25, losses: 0.718, 0.0857, 0.619, 0.0679, 0.592, 0.0703, 0.0382, 0.056 (-0=>2.247)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 260, loss: 2.27, losses: 0.719, 0.0846, 0.628, 0.067, 0.6, 0.0704, 0.0382, 0.0594 (-5=>2.244)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 270, loss: 2.27, losses: 0.724, 0.0859, 0.63, 0.0669, 0.598, 0.0703, 0.0381, 0.0555 (-1=>2.241)
0it [00:01, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 280, loss: 2.26, losses: 0.72, 0.0853, 0.625, 0.0674, 0.597, 0.0703, 0.0381, 0.0581 (-11=>2.241)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 290, loss: 2.25, losses: 0.716, 0.0855, 0.621, 0.0681, 0.593, 0.0701, 0.0381, 0.0574 (-21=>2.241)
0it [00:00, ?it/s] 0it [00:16, ?it/s] 0it [00:00, ?it/s]
iter: 300, finished (-31=>2.241)
0it [00:00, ?it/s] 0it [00:00, ?it/s]
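To track how the loss evolves, the `iter:` records in a log like this can be pulled out with a regular expression. The sketch below is an illustration only: `parse_iterations` and the field names are hypothetical, and reading the trailing `(-N=>X)` as "N iterations since the best loss X" is an assumption based on how the numbers behave in this log, not something the page states.

```python
import re

# One record looks like:
#   iter: 10, loss: 2.88, losses: 0.916, ..., 0.0707 (-0=>2.878)
# Whitespace is matched loosely so records wrapped across lines still parse.
ITER_RE = re.compile(
    r"iter:\s*(\d+),\s*loss:\s*([\d.]+),\s*losses:\s*([\d.,\s]+?)\s*"
    r"\(-(\d+)=>([\d.]+)\)"
)


def parse_iterations(log_text):
    """Hypothetical helper: extract per-iteration loss records from log text."""
    records = []
    for m in ITER_RE.finditer(log_text):
        records.append({
            "iter": int(m.group(1)),
            "loss": float(m.group(2)),
            "losses": [float(x) for x in re.split(r",\s*", m.group(3).strip())],
            "since_best": int(m.group(4)),   # assumed meaning, see lead-in
            "best_loss": float(m.group(5)),  # assumed meaning, see lead-in
        })
    return records


sample = (
    "0it [00:00, ?it/s] iter: 0, loss: 3.23, losses: 1.01, 0.0859, 0.928, "
    "0.0617, 0.904, 0.0643, 0.1, 0.0725 (-0=>3.227) 0it [00:00, ?it/s] "
    "iter: 10, loss: 2.88, losses: 0.916, 0.0816, 0.799, 0.061, 0.798, "
    "0.0613, 0.0905, 0.0707 (-0=>2.878)"
)

records = parse_iterations(sample)
for r in records:
    print(r["iter"], r["loss"], r["best_loss"], len(r["losses"]))
```

In the two records sampled here, the eight component values sum to roughly the reported `loss`, which suggests the total is their sum. The final `iter: 300, finished` line has no `loss:` field, so this pattern skips it.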

Version Details

- Version ID: 42615782823e77be5ef6d36270ae021c7b1883a189a9277ef699190eb24fd93a

- Version Created: May 29, 2022