vestigiaproject/fayumportraits 🖼️🔢❓📝✓ → 🖼️

About

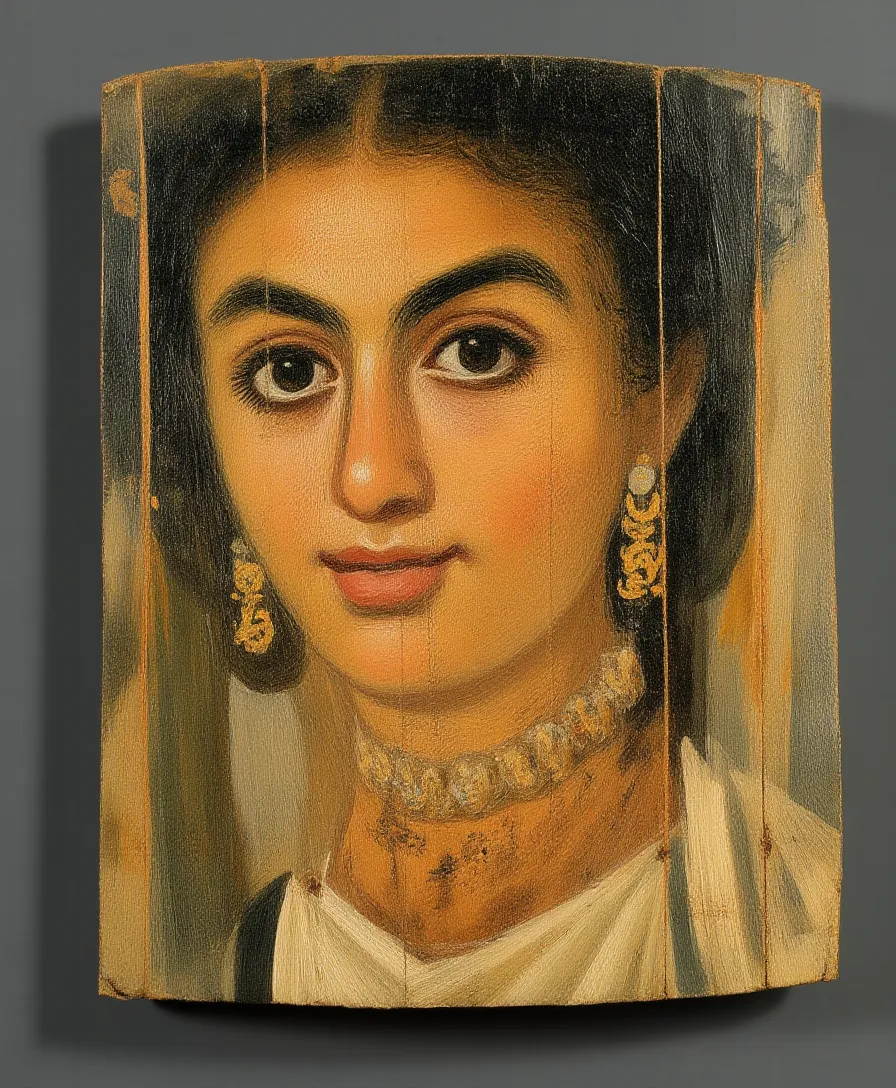

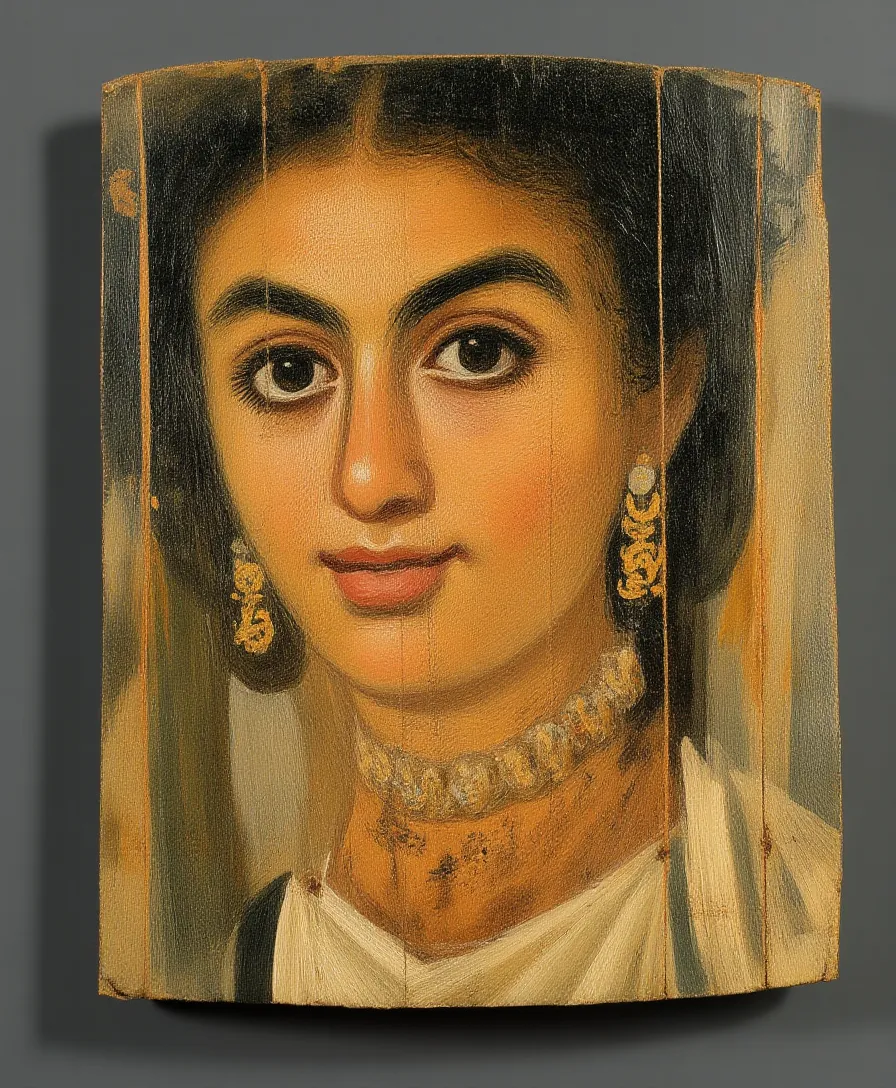

An image model trained on ancient Fayum wood paintings. Use "fayumprtrt" in the prompt.

Example Output

Prompt:

"A Fayum portrait of a woman in the style of fayumprtrt, encaustic on wood panel"

Output

Performance Metrics

9.50s

Prediction Time

9.53s

Total Time

All Input Parameters

{

"model": "dev",

"prompt": "A Fayum portrait of a woman in the style of fayumprtrt, encaustic on wood panel",

"go_fast": true,

"lora_scale": 1,

"megapixels": "1",

"num_outputs": 1,

"aspect_ratio": "4:5",

"output_format": "webp",

"guidance_scale": 1.8,

"output_quality": 80,

"prompt_strength": 0.8,

"extra_lora_scale": 1,

"num_inference_steps": 20

}

Input Parameters

- mask

- Image mask for image inpainting mode. If provided, aspect_ratio, width, and height inputs are ignored.

- seed

- Random seed. Set for reproducible generation

- image

- Input image for image to image or inpainting mode. If provided, aspect_ratio, width, and height inputs are ignored.

- model

- Which model to run inference with. The dev model performs best with around 28 inference steps but the schnell model only needs 4 steps.

- width

- Width of generated image. Only works if `aspect_ratio` is set to custom. Will be rounded to nearest multiple of 16. Incompatible with fast generation

- height

- Height of generated image. Only works if `aspect_ratio` is set to custom. Will be rounded to nearest multiple of 16. Incompatible with fast generation

- prompt (required)

- Prompt for generated image. If you include the `trigger_word` used in the training process you are more likely to activate the trained object, style, or concept in the resulting image.

- go_fast

- Run faster predictions with model optimized for speed (currently fp8 quantized); disable to run in original bf16

- extra_lora

- Load LoRA weights. Supports Replicate models in the format <owner>/<username> or <owner>/<username>/<version>, HuggingFace URLs in the format huggingface.co/<owner>/<model-name>, CivitAI URLs in the format civitai.com/models/<id>[/<model-name>], or arbitrary .safetensors URLs from the Internet. For example, 'fofr/flux-pixar-cars'

- lora_scale

- Determines how strongly the main LoRA should be applied. Sane results between 0 and 1 for base inference. For go_fast we apply a 1.5x multiplier to this value; we've generally seen good performance when scaling the base value by that amount. You may still need to experiment to find the best value for your particular lora.

- megapixels

- Approximate number of megapixels for generated image

- num_outputs

- Number of outputs to generate

- aspect_ratio

- Aspect ratio for the generated image. If custom is selected, uses height and width below & will run in bf16 mode

- output_format

- Format of the output images

- guidance_scale

- Guidance scale for the diffusion process. Lower values can give more realistic images. Good values to try are 2, 2.5, 3 and 3.5

- output_quality

- Quality when saving the output images, from 0 to 100. 100 is best quality, 0 is lowest quality. Not relevant for .png outputs

- prompt_strength

- Prompt strength when using img2img. 1.0 corresponds to full destruction of information in image

- extra_lora_scale

- Determines how strongly the extra LoRA should be applied. Sane results between 0 and 1 for base inference. For go_fast we apply a 1.5x multiplier to this value; we've generally seen good performance when scaling the base value by that amount. You may still need to experiment to find the best value for your particular lora.

- replicate_weights

- Load LoRA weights. Supports Replicate models in the format <owner>/<username> or <owner>/<username>/<version>, HuggingFace URLs in the format huggingface.co/<owner>/<model-name>, CivitAI URLs in the format civitai.com/models/<id>[/<model-name>], or arbitrary .safetensors URLs from the Internet. For example, 'fofr/flux-pixar-cars'

- num_inference_steps

- Number of denoising steps. More steps can give more detailed images, but take longer.

- disable_safety_checker

- Disable safety checker for generated images.

Output Schema

Output

Example Execution Logs

2025-01-09 08:58:00.204 | DEBUG | fp8.lora_loading:apply_lora_to_model:574 - Extracting keys 2025-01-09 08:58:00.205 | DEBUG | fp8.lora_loading:apply_lora_to_model:581 - Keys extracted Applying LoRA: 0%| | 0/304 [00:00<?, ?it/s] Applying LoRA: 100%|██████████| 304/304 [00:00<00:00, 12793.16it/s] 2025-01-09 08:58:00.229 | SUCCESS | fp8.lora_loading:unload_loras:564 - LoRAs unloaded in 0.025s free=29437839749120 Downloading weights 2025-01-09T08:58:00Z | INFO | [ Initiating ] chunk_size=150M dest=/tmp/tmpajei2m2m/weights url=https://replicate.delivery/yhqm/xVO7sOKzQfQGCafYTszp5DkQLW7Y7Z3Z1YJPWnrHRp3GiRoTA/trained_model.tar 2025-01-09T08:58:07Z | INFO | [ Complete ] dest=/tmp/tmpajei2m2m/weights size="172 MB" total_elapsed=7.167s url=https://replicate.delivery/yhqm/xVO7sOKzQfQGCafYTszp5DkQLW7Y7Z3Z1YJPWnrHRp3GiRoTA/trained_model.tar Downloaded weights in 7.19s 2025-01-09 08:58:07.422 | INFO | fp8.lora_loading:convert_lora_weights:498 - Loading LoRA weights for /src/weights-cache/6a0991c03b8b17b0 2025-01-09 08:58:07.493 | INFO | fp8.lora_loading:convert_lora_weights:519 - LoRA weights loaded 2025-01-09 08:58:07.494 | DEBUG | fp8.lora_loading:apply_lora_to_model:574 - Extracting keys 2025-01-09 08:58:07.494 | DEBUG | fp8.lora_loading:apply_lora_to_model:581 - Keys extracted Applying LoRA: 0%| | 0/304 [00:00<?, ?it/s] Applying LoRA: 39%|███▉ | 119/304 [00:00<00:00, 1186.30it/s] Applying LoRA: 78%|███████▊ | 238/304 [00:00<00:00, 981.50it/s] Applying LoRA: 100%|██████████| 304/304 [00:00<00:00, 965.91it/s] 2025-01-09 08:58:07.809 | SUCCESS | fp8.lora_loading:load_lora:539 - LoRA applied in 0.39s running quantized prediction Using seed: 208448663 0%| | 0/20 [00:00<?, ?it/s] 10%|█ | 2/20 [00:00<00:00, 19.59it/s] 20%|██ | 4/20 [00:00<00:01, 14.64it/s] 30%|███ | 6/20 [00:00<00:01, 13.57it/s] 40%|████ | 8/20 [00:00<00:00, 13.12it/s] 50%|█████ | 10/20 [00:00<00:00, 12.84it/s] 60%|██████ | 12/20 [00:00<00:00, 12.63it/s] 70%|███████ | 14/20 [00:01<00:00, 12.39it/s] 80%|████████ | 16/20 [00:01<00:00, 12.35it/s] 90%|█████████ | 18/20 [00:01<00:00, 12.37it/s] 100%|██████████| 20/20 [00:01<00:00, 12.33it/s] 100%|██████████| 20/20 [00:01<00:00, 12.79it/s] Total safe images: 1 out of 1

Version Details

- Version ID

06766d910c3bb32a720d5a815761c3635150232b84d6c6a6b0a300d0246c19bd- Version Created

- October 19, 2024