lucataco/nsfw_video_detection

Detect NSFW content in videos. Accepts a video input and classifies it as 'nsfw' or 'normal' for automated content moder...

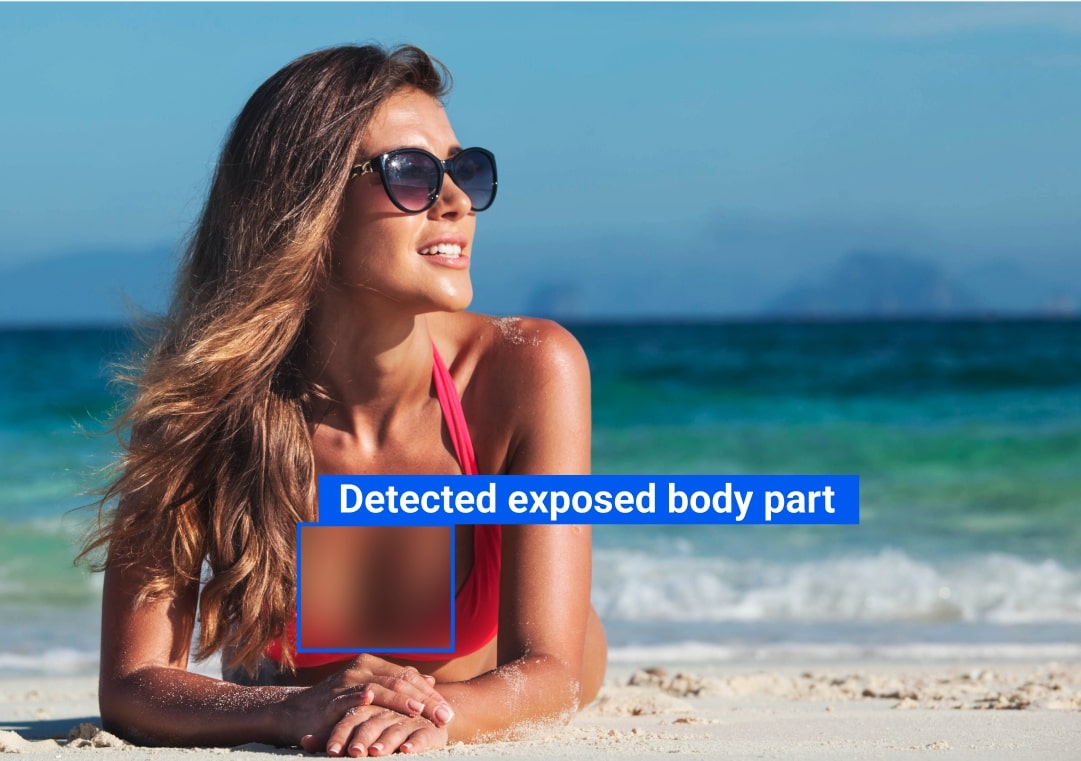

Detect NSFW content in images, returning a binary label ('normal' or 'nsfw'). Accepts a single image as input and output...

Detect NSFW content in images for moderation. Accepts an image and returns JSON classifications with boolean flags and c...

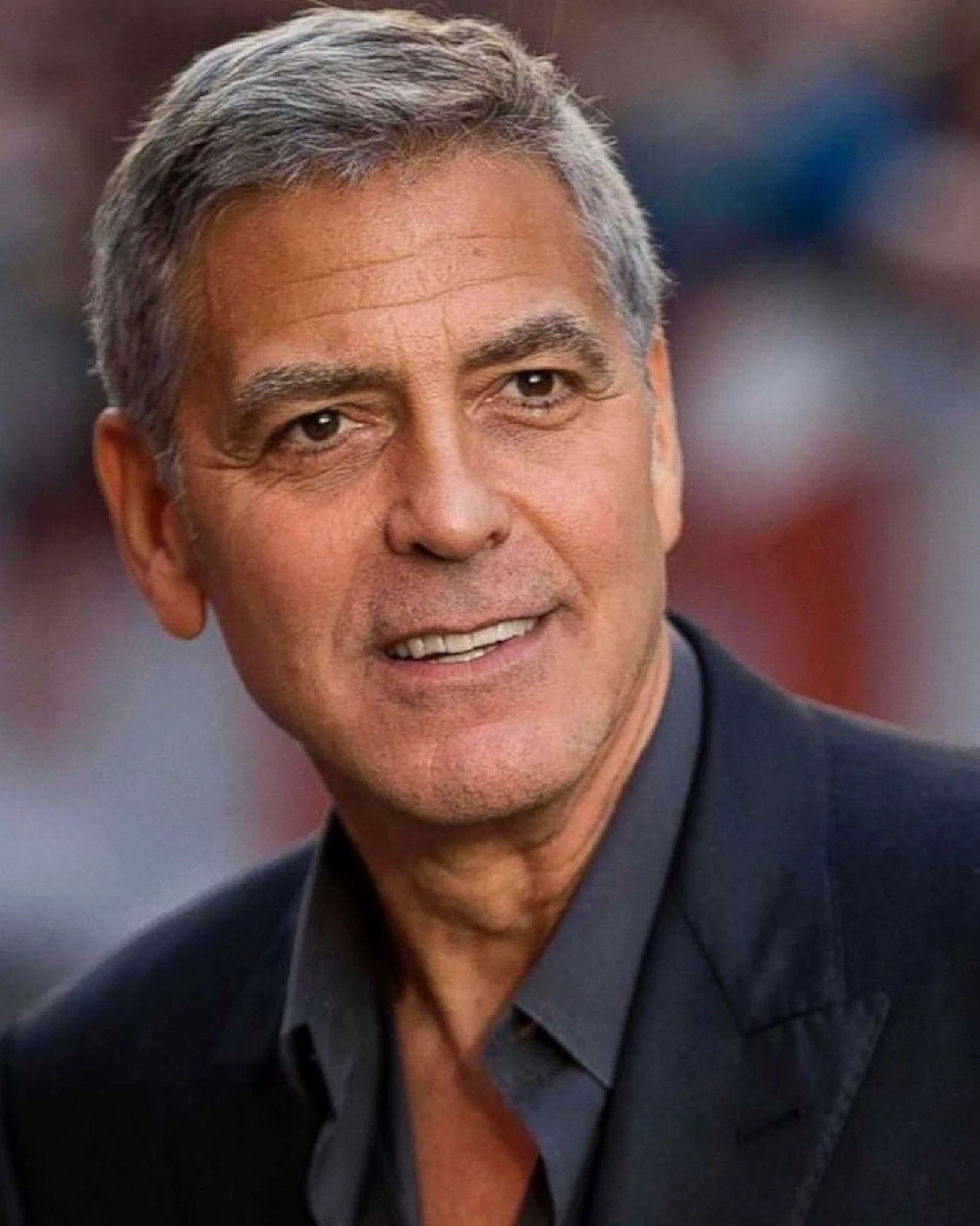

Moderate images and text prompts by detecting NSFW content, public figures, and copyright risks. Accepts an image and/or...

Classify text prompts for text-to-image systems and return a safety score from 0 (safe) to 10 (very NSFW/toxic). Accepts...

Moderate and classify text and images for safety policy compliance. Accepts a text prompt and optional multiple images, a...

Moderate LLM prompts and responses for safety policy compliance. Accepts a user prompt and/or an assistant reply as text...

Classify text prompts and assistant responses for safety. Accepts a user message and/or assistant reply and outputs a sa...

Detect hate speech and toxic content in text. Accepts a text string and returns JSON scores for toxicity, severe_toxicit...

Classify images for policy violations across sexually_explicit, dangerous_content, and violence_gore categories. Takes a...

Classify the safety of multimodal inputs (image and user message) for content moderation. Accepts an image (required) an...

Detect NSFW content in images. Accepts an image and outputs a safety label indicating whether the content is safe or uns...

Detect NSFW content in images. Takes an image and returns a moderation result with nsfw_detected (boolean), a list of NS...

Moderate text prompts and assistant responses for safety and policy compliance. Accepts a user message (prompt) and/or a...

Moderate images for safety and policy compliance. Takes an image input (optionally a custom prompt) and outputs structur...

Detect NSFW content in images and compare results across two classifiers. Takes an image input and returns JSON safety f...

Classify text against a custom safety policy. Provide a plain‑English policy and a text input, and receive a structured...

Classify text against custom safety policies with rationale. Accepts a plain‑English policy and a text input, and return...