fofr/nsfw-model-comparison

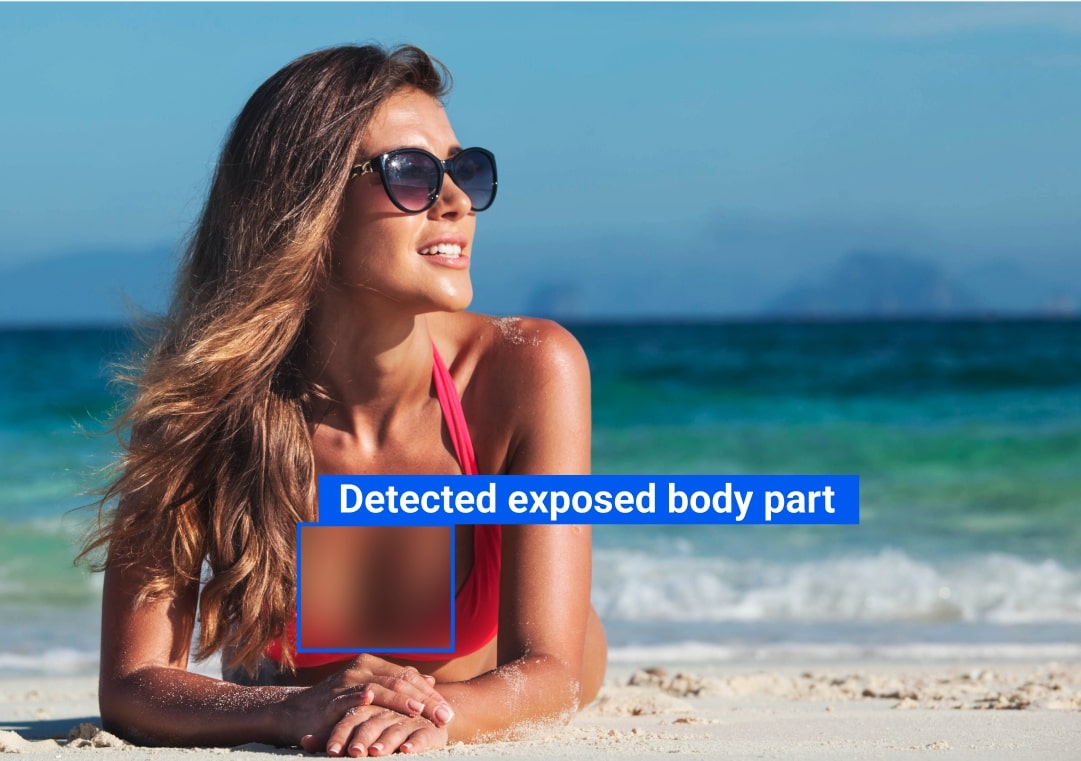

Detect NSFW content in images and compare results across two classifiers. Takes an image input and returns JSON safety f...

Moderate images for safety and policy compliance. Takes an image input (optionally a custom prompt) and outputs structur...

Classify images for policy violations across sexually_explicit, dangerous_content, and violence_gore categories. Takes a...

Detect NSFW content in images for moderation. Accepts an image and returns JSON classifications with boolean flags and c...