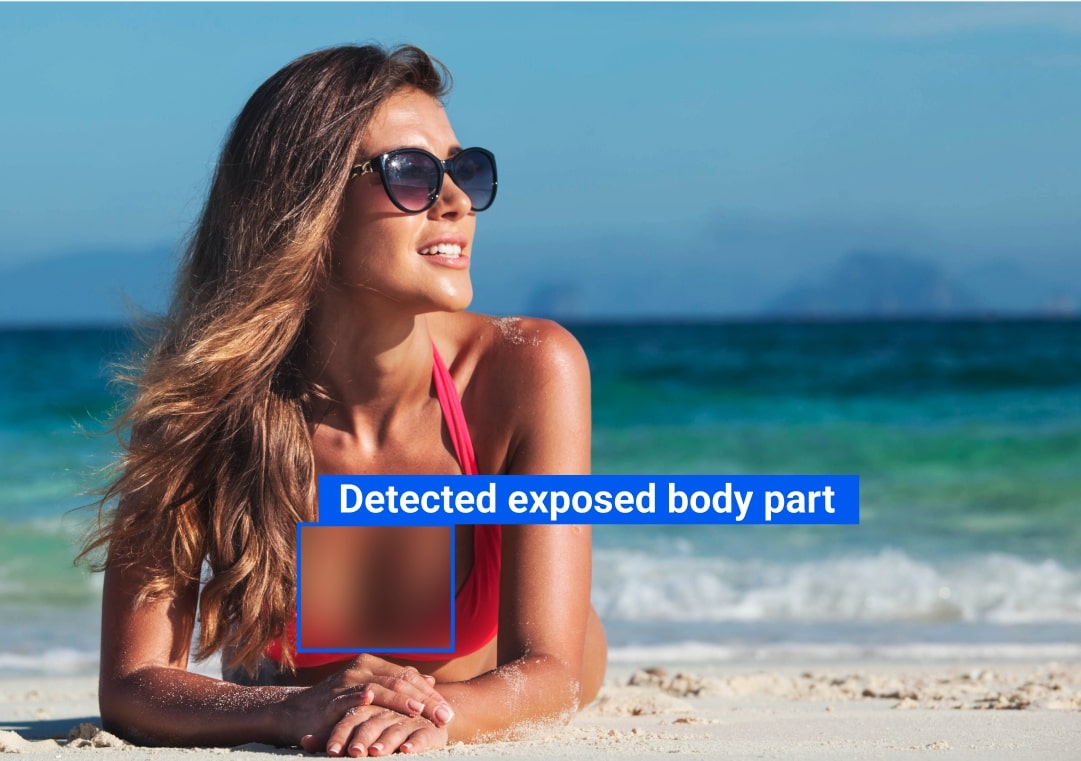

m1guelpf/nsfw-filter

Detect NSFW content in images. Takes an image and returns a moderation result with nsfw_detected (boolean), a list of NS...

Found 11 models (showing 1-11)

Detect NSFW content in images, returning a binary label ('normal' or 'nsfw'). Accepts a single image as input and output...

Detect NSFW content in images for moderation. Accepts an image and returns JSON classifications with boolean flags and c...

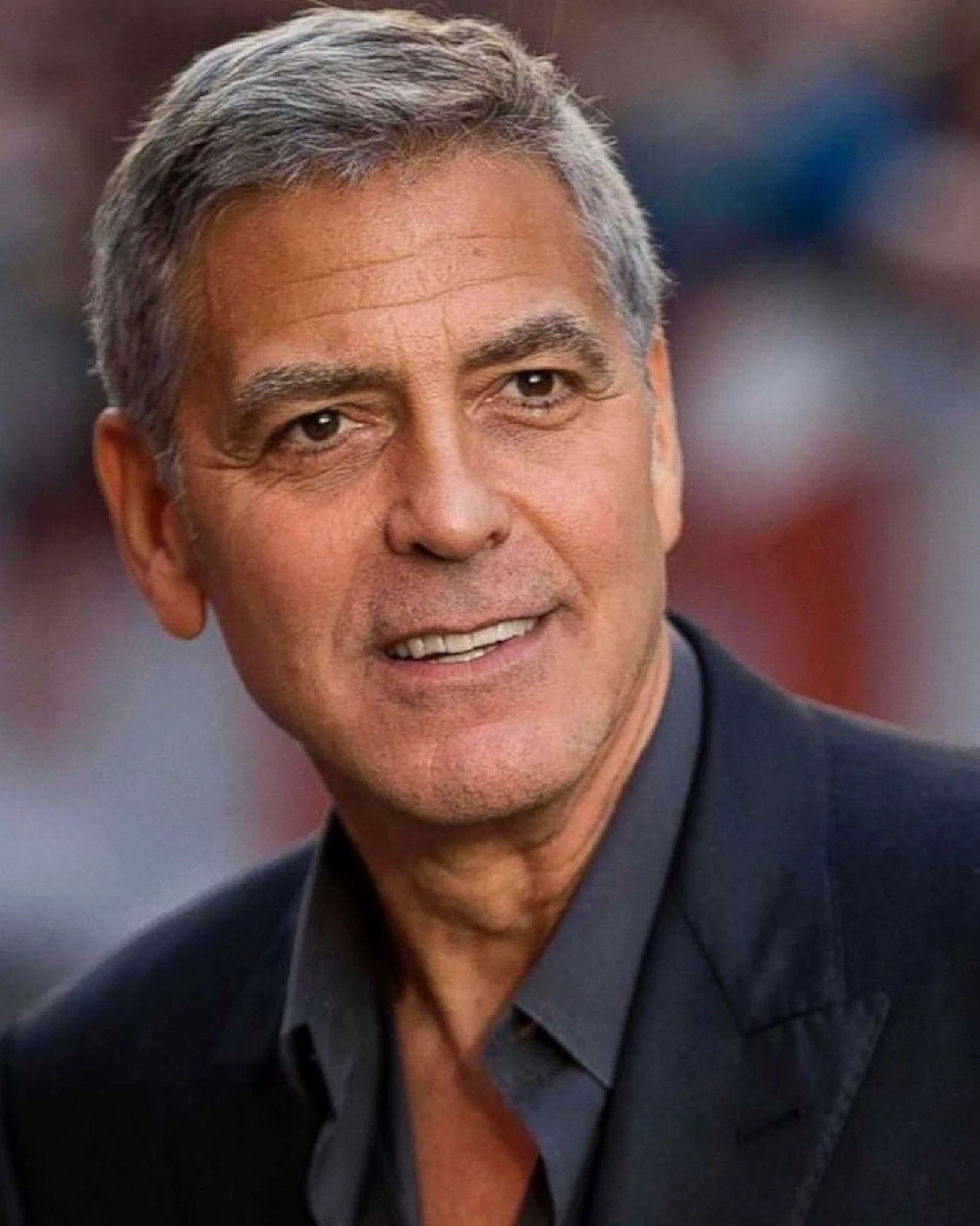

Moderate images and text prompts by detecting NSFW content, public figures, and copyright risks. Accepts an image and/or...

Detect NSFW content in images and compare results across two classifiers. Takes an image input and returns JSON safety f...

Moderate images for safety and policy compliance. Takes an image input (optionally a custom prompt) and outputs structur...

Classify images for policy violations across sexually_explicit, dangerous_content, and violence_gore categories. Takes a...

Classify the safety of multimodal inputs (image and user message) for content moderation. Accepts an image (required) an...

Detect NSFW content in images. Accepts an image and outputs a safety label indicating whether the content is safe or uns...

Moderate and classify text and images for safety policy compliance. Accept a text prompt and optional multiple images, a...

Tag images with general, character, and rating labels from a single image input. Return a list of tags with confidence s...