sabuhigr/sabuhi-model

About

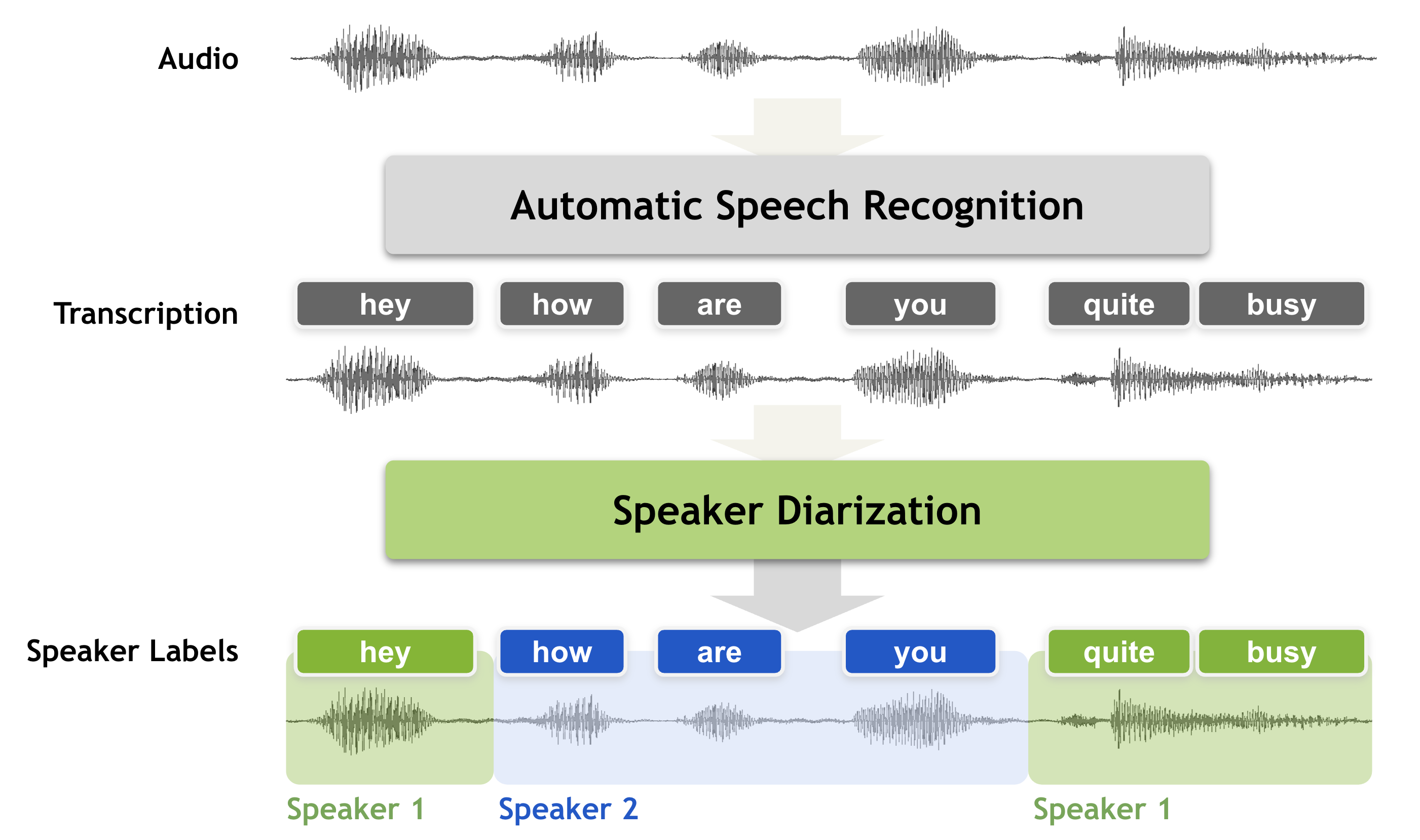

Whisper AI with channel separation and speaker diarization

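Example Output

Transcription of an Arabic test recording (the sentence means "Cairo lies on the sides of the islands of the Nile River in northern Egypt"):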

{"segments":[{"end":3.98,"text":" تقع القاهرة على جوانب جزر نهر النيل في شمال مصر","start":0.06,"words":[{"end":0.422,"word":"تقع","score":0.884,"start":0.06},{"end":1.025,"word":"القاهرة","score":0.899,"start":0.442,"speaker":"SPEAKER_00"},{"end":1.306,"word":"على","score":0.874,"start":1.085,"speaker":"SPEAKER_00"},{"end":1.95,"word":"جوانب","score":0.963,"start":1.367,"speaker":"SPEAKER_00"},{"end":2.372,"word":"جزر","score":0.928,"start":2.01,"speaker":"SPEAKER_00"},{"end":2.713,"word":"نهر","score":0.991,"start":2.412,"speaker":"SPEAKER_00"},{"end":3.015,"word":"النيل","score":0.527,"start":2.734,"speaker":"SPEAKER_00"},{"end":3.196,"word":"في","score":0.999,"start":3.075,"speaker":"SPEAKER_00"},{"end":3.678,"word":"شمال","score":0.986,"start":3.276,"speaker":"SPEAKER_00"},{"end":3.98,"word":"مصر","score":0.534,"start":3.759,"speaker":"SPEAKER_00"}],"speaker":"SPEAKER_00"}],"translation":null,"transcription":"[{'start': 0.06, 'end': 3.98, 'text': ' تقع القاهرة على جوانب جزر نهر النيل في شمال مصر', 'words': [{'word': 'تقع', 'start': 0.06, 'end': 0.422, 'score': 0.884}, {'word': 'القاهرة', 'start': 0.442, 'end': 1.025, 'score': 0.899, 'speaker': 'SPEAKER_00'}, {'word': 'على', 'start': 1.085, 'end': 1.306, 'score': 0.874, 'speaker': 'SPEAKER_00'}, {'word': 'جوانب', 'start': 1.367, 'end': 1.95, 'score': 0.963, 'speaker': 'SPEAKER_00'}, {'word': 'جزر', 'start': 2.01, 'end': 2.372, 'score': 0.928, 'speaker': 'SPEAKER_00'}, {'word': 'نهر', 'start': 2.412, 'end': 2.713, 'score': 0.991, 'speaker': 'SPEAKER_00'}, {'word': 'النيل', 'start': 2.734, 'end': 3.015, 'score': 0.527, 'speaker': 'SPEAKER_00'}, {'word': 'في', 'start': 3.075, 'end': 3.196, 'score': 0.999, 'speaker': 'SPEAKER_00'}, {'word': 'شمال', 'start': 3.276, 'end': 3.678, 'score': 0.986, 'speaker': 'SPEAKER_00'}, {'word': 'مصر', 'start': 3.759, 'end': 3.98, 'score': 0.534, 'speaker': 'SPEAKER_00'}], 'speaker': 'SPEAKER_00'}]","detected_language":"arabic"}
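In the example above, the `transcription` field is not nested JSON. It holds the Python `repr` of the segment list (single-quoted keys), so a strict JSON parser returns it as a plain string. Below is a minimal Python sketch for reading the output. It assumes the output arrives as the JSON text shown, trimmed here to two words. `load_prediction`, `speaker_lines`, and `unlabeled_words` are hypothetical helper names.

```python
import ast
import json

# Trimmed copy of the example output: one segment, two of its words.
EXAMPLE_OUTPUT = '''{
  "segments": [{
    "start": 0.06, "end": 3.98, "speaker": "SPEAKER_00",
    "text": " تقع القاهرة على جوانب جزر نهر النيل في شمال مصر",
    "words": [
      {"word": "تقع", "start": 0.06, "end": 0.422, "score": 0.884},
      {"word": "القاهرة", "start": 0.442, "end": 1.025, "score": 0.899, "speaker": "SPEAKER_00"}
    ]
  }],
  "translation": null,
  "transcription": "[{'start': 0.06, 'end': 3.98, 'text': ' تقع القاهرة على جوانب جزر نهر النيل في شمال مصر', 'speaker': 'SPEAKER_00'}]",
  "detected_language": "arabic"
}'''


def load_prediction(raw: str) -> dict:
    """Parse the JSON output, then decode the `transcription` field, which is a
    Python repr (single quotes) rather than JSON, using ast.literal_eval."""
    output = json.loads(raw)
    transcription = output.get("transcription")
    if isinstance(transcription, str) and transcription.startswith("["):
        output["transcription"] = ast.literal_eval(transcription)
    return output


def speaker_lines(output: dict) -> list:
    """Build one 'SPEAKER [start-end]: text' line per segment."""
    lines = []
    for seg in output["segments"]:
        speaker = seg.get("speaker", "UNKNOWN")
        lines.append(f"{speaker} [{seg['start']:.2f}-{seg['end']:.2f}]:{seg['text']}")
    return lines


def unlabeled_words(output: dict) -> list:
    """Return the words that came back without a 'speaker' key."""
    return [w["word"] for seg in output["segments"]
            for w in seg["words"] if "speaker" not in w]
```

On the example, `unlabeled_words` returns only the first word, تقع. That word has no `speaker` key in the output above.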

Performance Metrics

Prediction Time: 56.17s

Total Time: 231.68s

All Input Parameters

{
  "audio": "https://replicate.delivery/pbxt/J2ZTuLIUqfnWLDBJ8LYX7SmtnSQzdx0RAxPXzh3aKhKMPQhx/test-arabic.wav",
  "model": "large-v2",
  "hf_token": "<YOUR_HF_TOKEN>",
  "language": "ar",
  "max_speakers": "2",
  "min_speakers": "2",
  "transcription": "plain text",
  "suppress_tokens": "-1",
  "logprob_threshold": -1,
  "no_speech_threshold": 0.6,
  "condition_on_previous_text": true,
  "compression_ratio_threshold": 2.4,
  "temperature_increment_on_fallback": 0.2
}
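Below is a minimal sketch of sending the same request through Replicate's Python client (`pip install replicate`). `replicate.run` reads the API token from the `REPLICATE_API_TOKEN` environment variable. The model reference combines the name at the top of this page with the version ID under Version Details. `build_input` and `run_prediction` are hypothetical helpers, and their values only mirror the example parameters above.

```python
# Model name from this page plus the version ID listed under "Version Details".
MODEL_REF = ("sabuhigr/sabuhi-model:"
             "29b6421db707f07d23fbb646c260b1e2754cbf7ccaf081435330a0c2a3544b74")


def build_input(audio_url: str, hf_token: str, language: str = "ar",
                speakers: int = 2) -> dict:
    """Hypothetical helper mirroring the example parameters above.
    The example passes max_speakers, min_speakers and suppress_tokens as strings."""
    return {
        "audio": audio_url,
        "model": "large-v2",
        "hf_token": hf_token,
        "language": language,
        "max_speakers": str(speakers),
        "min_speakers": str(speakers),
        "transcription": "plain text",
        "suppress_tokens": "-1",
        "logprob_threshold": -1,
        "no_speech_threshold": 0.6,
        "condition_on_previous_text": True,
        "compression_ratio_threshold": 2.4,
        "temperature_increment_on_fallback": 0.2,
    }


def run_prediction(inputs: dict):
    """Call the hosted model. Requires the `replicate` package and
    REPLICATE_API_TOKEN set in the environment."""
    import replicate  # imported lazily so build_input works without the client
    return replicate.run(MODEL_REF, input=inputs)
```

For example, `run_prediction(build_input(audio_url, hf_token))` should return a structure like the Example Output above. Keep the Hugging Face token out of source code, for instance by reading it from an environment variable.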

Input Parameters

- audio (required): Audio file.

- model: Whisper model to use (the example above uses `large-v2`).

- hf_token (required): Your Hugging Face token, used for speaker diarization.

- language (required): Language spoken in the audio; specify None to perform language detection.

- patience: Optional patience value for beam decoding, as in https://arxiv.org/abs/2204.05424; the default (1.0) is equivalent to conventional beam search.

- translate: Translate the text to English when set to True.

- temperature: Temperature to use for sampling.

- max_speakers: Maximum number of speakers. Select 2 if the recording is stereo, 1 if it is mono. Default is 1 (mono).

- min_speakers: Minimum number of speakers. Select 2 if the recording is stereo, 1 if it is mono. Default is 1 (mono).

- transcription: Format of the transcription (the example above uses `plain text`).

- initial_prompt: Optional text to provide as a prompt for the first window.

- suppress_tokens: Comma-separated list of token IDs to suppress during sampling; '-1' suppresses most special characters except common punctuation.

- logprob_threshold: If the average log probability is lower than this value, treat the decoding as failed.

- no_speech_threshold: If the probability of the `<|nospeech|>` token is higher than this value AND the decoding has failed due to `logprob_threshold`, treat the segment as silence.

- condition_on_previous_text: If True, provide the previous output of the model as a prompt for the next window. Disabling this may make the text inconsistent across windows, but the model becomes less prone to getting stuck in a failure loop.

- compression_ratio_threshold: If the gzip compression ratio is higher than this value, treat the decoding as failed.

- temperature_increment_on_fallback: Amount by which to increase the temperature when falling back, i.e. when the decoding fails to meet either of the thresholds above (`compression_ratio_threshold` or `logprob_threshold`).
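The threshold parameters above work together during decoding. The sketch below approximates the fallback rule in OpenAI's open-source `whisper` package, whose parameter names this model reuses. It is an assumption about how the model applies them, not its actual code. Temperatures are tried in steps of `temperature_increment_on_fallback` up to 1.0. A decode "fails" if its compression ratio exceeds `compression_ratio_threshold` or its average log probability is below `logprob_threshold`. As in `whisper`, the compression ratio here is computed with zlib (the DEFLATE algorithm gzip uses) on the UTF-8 text. `decode` is a hypothetical stand-in for the model's decoder.

```python
import zlib


def fallback_temperatures(start: float = 0.0, increment: float = 0.2) -> list:
    """Temperatures tried in order: start, start + increment, ... up to 1.0."""
    if increment <= 0:
        return [start]
    steps = max(int((1.0 - start) / increment + 1e-6), 0)
    return [round(start + i * increment, 6) for i in range(steps + 1)]


def compression_ratio(text: str) -> float:
    """Raw UTF-8 size divided by compressed size; repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decoding_failed(text: str, avg_logprob: float,
                    compression_ratio_threshold: float = 2.4,
                    logprob_threshold: float = -1.0) -> bool:
    """A decode fails if it is too repetitive or too improbable."""
    return (compression_ratio(text) > compression_ratio_threshold
            or avg_logprob < logprob_threshold)


def is_silence(no_speech_prob: float, avg_logprob: float,
               no_speech_threshold: float = 0.6,
               logprob_threshold: float = -1.0) -> bool:
    """Silence: high <|nospeech|> probability AND a failed log-probability check."""
    return no_speech_prob > no_speech_threshold and avg_logprob < logprob_threshold


def decode_with_fallback(decode, temperatures):
    """`decode` is a hypothetical callable: temperature -> (text, avg_logprob).
    Returns (temperature, result) for the first result that passes both
    thresholds, or for the last temperature tried if none pass."""
    t, result = None, None
    for t in temperatures:
        result = decode(t)
        if not decoding_failed(*result):
            break
    return t, result
```

With the example settings (temperature 0, increment 0.2), up to six temperatures are tried: 0.0, 0.2, 0.4, 0.6, 0.8, and 1.0.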

Output Schema

Output

Example Execution Logs

Transcribe with large-v2 model

100%|██████████| 439/439 [00:16<00:00, 25.94frames/s]

Downloading (…)rocessor_config.json: 100%|██████████| 158/158 [00:00<00:00, 49.9kB/s]

Downloading (…)lve/main/config.json: 1.56kB [00:00, 2.80MB/s]

Downloading (…)olve/main/vocab.json: 100%|██████████| 507/507 [00:00<00:00, 268kB/s]

Downloading (…)cial_tokens_map.json: 100%|██████████| 85.0/85.0 [00:00<00:00, 47.2kB/s]

Downloading pytorch_model.bin: 100%|██████████| 1.26G/1.26G [00:25<00:00, 49.8MB/s]

Downloading (…)olve/2.1/config.yaml: 100%|██████████| 500/500 [00:00<00:00, 67.2kB/s]

Downloading pytorch_model.bin: 100%|██████████| 17.7M/17.7M [00:00<00:00, 56.2MB/s]

Downloading (…)/2022.07/config.yaml: 100%|██████████| 318/318 [00:00<00:00, 164kB/s]

Lightning automatically upgraded your loaded checkpoint from v1.5.4 to v2.0.3. To apply the upgrade to your files permanently, run `python -m pytorch_lightning.utilities.upgrade_checkpoint --file ../root/.cache/torch/pyannote/models--pyannote--segmentation/snapshots/c4c8ceafcbb3a7a280c2d357aee9fbc9b0be7f9b/pytorch_model.bin`

Model was trained with pyannote.audio 0.0.1, yours is 2.1.1. Bad things might happen unless you revert pyannote.audio to 0.x.

Model was trained with torch 1.10.0+cu102, yours is 2.0.0+cu117. Bad things might happen unless you revert torch to 1.x.

Downloading (…)ain/hyperparams.yaml: 1.92kB [00:00, 3.93MB/s]

Downloading embedding_model.ckpt: 100%|██████████| 83.3M/83.3M [00:00<00:00, 209MB/s]

Downloading (…)an_var_norm_emb.ckpt: 100%|██████████| 1.92k/1.92k [00:00<00:00, 1.03MB/s]

Downloading classifier.ckpt: 100%|██████████| 5.53M/5.53M [00:00<00:00, 179MB/s]

Downloading (…)in/label_encoder.txt: 129kB [00:00, 66.6MB/s]

0 1 ... intersection union

0 [ 00:00:00.497 --> 00:00:04.345] A ... 0.221 3.8475

[1 rows x 7 columns]
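The one-row table printed above appears to be the overlap table used to match diarization turns to words. Diarization found a single turn, labeled A, from 00:00:00.497 to 00:00:04.345. The `intersection` value (0.221) equals the span of the last word, مصر (3.759 s to 3.98 s). The `union` value (3.8475) is the span from 0.497 s to 4.345 s, up to the rounding of the printed timestamps. This would explain why the first word, تقع (0.06 s to 0.422 s), has no `speaker` in the output: it ends before the turn begins, so its overlap is negative. The sketch below reproduces that overlap rule, modeled on the approach of the open-source whisperX project. It is not this model's actual code, and `overlap`, `assign_speaker`, and `label_words` are hypothetical names.

```python
from typing import List, Optional, Tuple

Turn = Tuple[float, float, str]  # (start_s, end_s, speaker)


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Signed overlap of two intervals; negative when they are disjoint."""
    return min(a_end, b_end) - max(a_start, b_start)


def assign_speaker(start: float, end: float, turns: List[Turn]) -> Optional[str]:
    """Speaker with the largest total positive overlap, or None if no turn overlaps."""
    totals = {}
    for t_start, t_end, speaker in turns:
        inter = overlap(start, end, t_start, t_end)
        if inter > 0:
            totals[speaker] = totals.get(speaker, 0.0) + inter
    return max(totals, key=totals.get) if totals else None


def label_words(words: list, turns: List[Turn]) -> list:
    """Copy each word dict, adding 'speaker' only when some turn overlaps it."""
    labeled = []
    for word in words:
        word = dict(word)
        speaker = assign_speaker(word["start"], word["end"], turns)
        if speaker is not None:
            word["speaker"] = speaker
        labeled.append(word)
    return labeled
```

With the single turn `(0.497, 4.345, "SPEAKER_00")`, the word تقع stays unlabeled and every later word receives `SPEAKER_00`. This matches the example output.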

[{'start': 0.06, 'end': 3.98, 'text': ' تقع القاهرة على جوانب جزر نهر النيل في شمال مصر', 'words': [{'word': 'تقع', 'start': 0.06, 'end': 0.422, 'score': 0.884}, {'word': 'القاهرة', 'start': 0.442, 'end': 1.025, 'score': 0.899, 'speaker': 'SPEAKER_00'}, {'word': 'على', 'start': 1.085, 'end': 1.306, 'score': 0.874, 'speaker': 'SPEAKER_00'}, {'word': 'جوانب', 'start': 1.367, 'end': 1.95, 'score': 0.963, 'speaker': 'SPEAKER_00'}, {'word': 'جزر', 'start': 2.01, 'end': 2.372, 'score': 0.928, 'speaker': 'SPEAKER_00'}, {'word': 'نهر', 'start': 2.412, 'end': 2.713, 'score': 0.991, 'speaker': 'SPEAKER_00'}, {'word': 'النيل', 'start': 2.734, 'end': 3.015, 'score': 0.527, 'speaker': 'SPEAKER_00'}, {'word': 'في', 'start': 3.075, 'end': 3.196, 'score': 0.999, 'speaker': 'SPEAKER_00'}, {'word': 'شمال', 'start': 3.276, 'end': 3.678, 'score': 0.986, 'speaker': 'SPEAKER_00'}, {'word': 'مصر', 'start': 3.759, 'end': 3.98, 'score': 0.534, 'speaker': 'SPEAKER_00'}], 'speaker': 'SPEAKER_00'}]

Version Details

- Version ID: 29b6421db707f07d23fbb646c260b1e2754cbf7ccaf081435330a0c2a3544b74

- Version Created: August 3, 2023