openai/clip

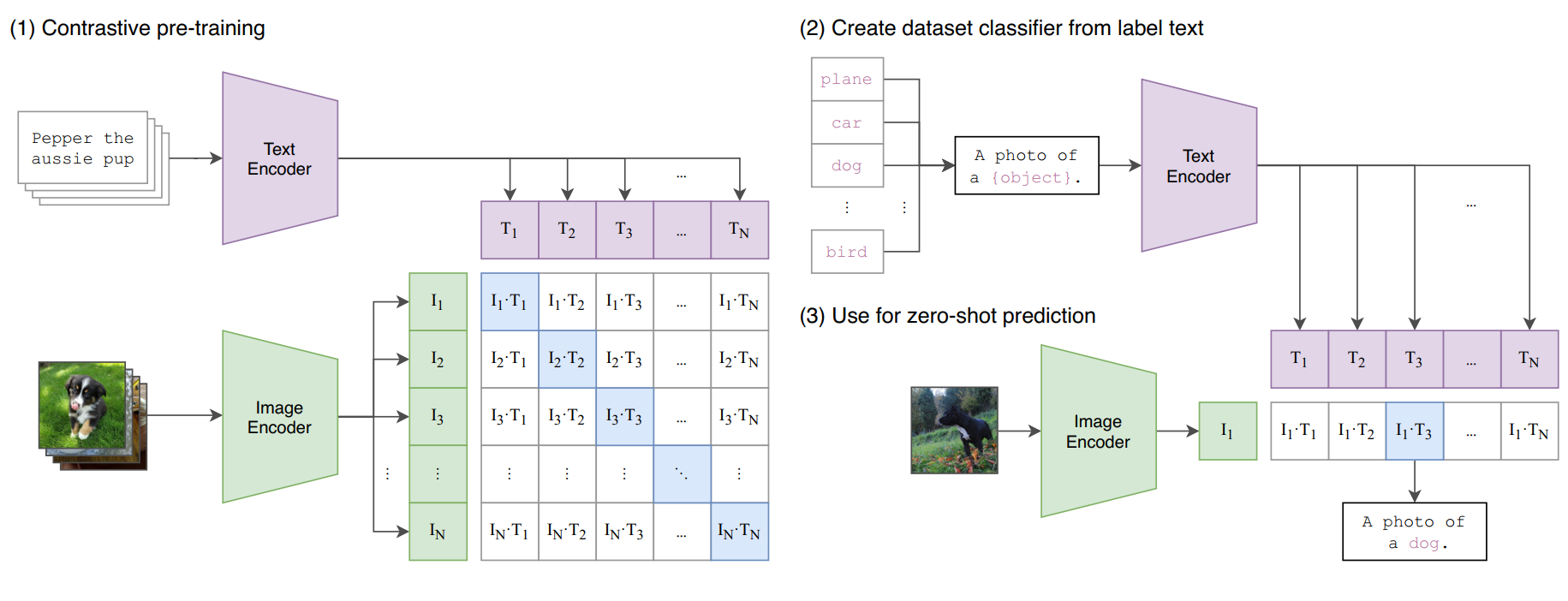

Create 768-dimensional CLIP (ViT-L/14) embeddings from text or images. Embed both modalities into a shared vector space...

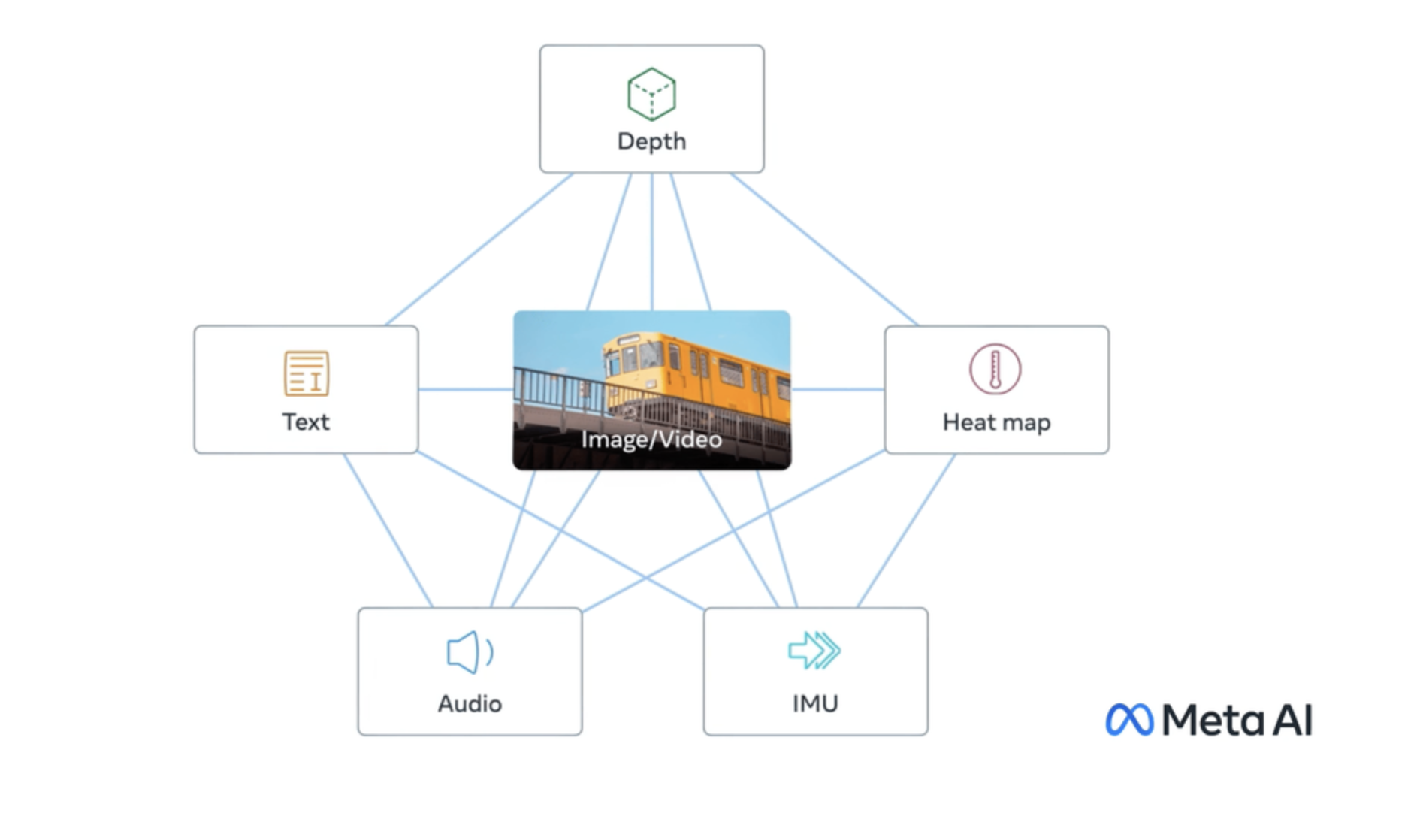

Generate shared embeddings for text, images, and audio for cross-modal retrieval and similarity search. Accepts a text s...

Generate dense embeddings for text queries and document screenshots to power document, PDF, webpage, and slide retrieval...

Generate text and image embeddings for semantic search and cross-modal retrieval. Accepts text or an image and returns a...

Generate CLIP ViT-L/14 embeddings from text and images for cross-modal similarity search and retrieval. Accept text stri...

Create 512-dimensional embeddings for images and text for similarity search, semantic retrieval, and clustering. Accept...

Create multilingual text and image embeddings for cross-modal retrieval and semantic search. Accepts text (up to 8192 to...

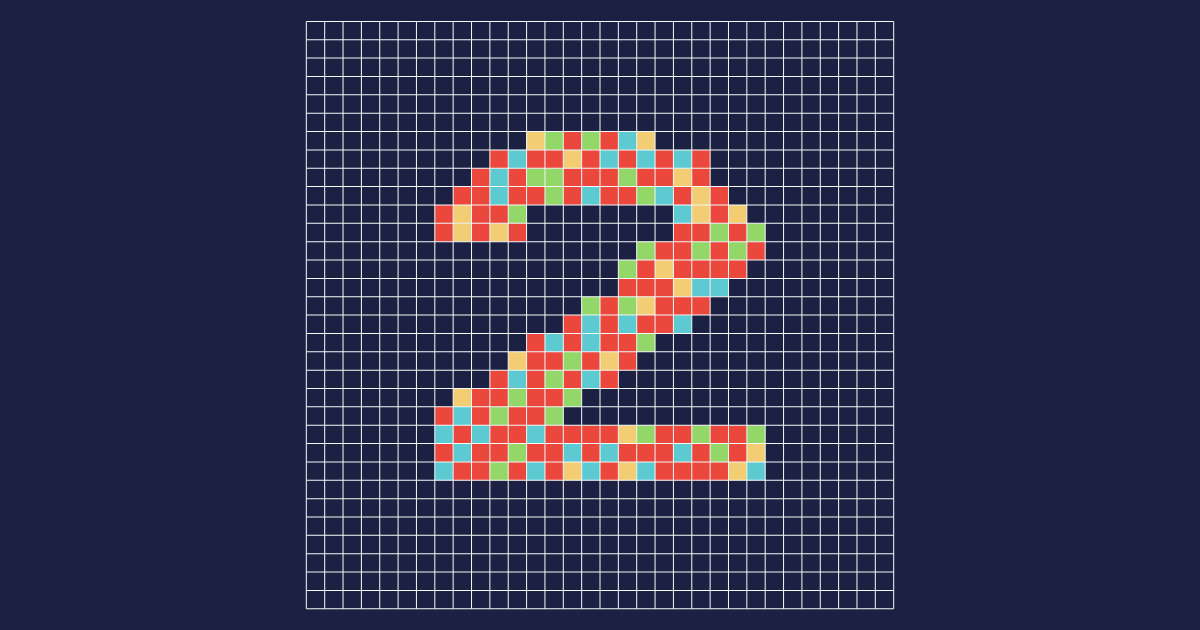

Embed images and text into a shared CLIP vector space for similarity search, cross-modal retrieval, and zero-shot classi...

Generate image embeddings from an input image for use with the Segment Anything Model (SAM) ViT-H. Accepts a single imag...

Compute CLIP embeddings for batches of text and images. Accept multiple newline-separated inputs and return one vector e...

Convert images into vector embeddings for visual similarity search, image retrieval, near-duplicate detection, clusterin...
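
Most of the models listed here return embedding vectors intended for similarity search. As a minimal sketch of how such vectors are typically compared, the snippet below ranks candidates by cosine similarity. It assumes the embeddings have already been obtained from one of these models; the function names, file names, and the toy 3-dimensional vectors are hypothetical and are not taken from any model's API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cannot compare a zero-length vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, candidates):
    """Sort (name, score) pairs from most to least similar to the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy stand-ins for real embeddings (hypothetical values; real CLIP
# vectors would have 512 or 768 dimensions, as the descriptions note).
text_query = [0.9, 0.1, 0.0]
image_embeddings = {
    "cat.jpg": [0.8, 0.2, 0.1],
    "car.jpg": [0.0, 0.1, 0.9],
}

ranking = rank_by_similarity(text_query, image_embeddings)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Cross-modal comparison like this is only meaningful when the text and image vectors come from the same model, since each model defines its own shared vector space.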